
According to the National Highway Administration of the United States, 90% of traffic accidents[1] are caused by driver factors. In the analysis of accident causes by Treat et al.[2], road factors accounted for 34.9%, vehicle factors accounted for only 9.1%, and driver factors reached as high as 92.9%. Drivers, who have subjective initiative, are the direct participants and decision-makers in the operation of the transportation system. The rationality of their decision-making and the correctness of their behavior are key to the safe and efficient operation of the transportation system. In 1963, Williams[3] discovered a trance-like state similar to hypnosis that drivers experience on long and monotonous roads. He termed this state 'highway hypnosis', which leads to reduced awareness and lack of attention, posing potential safety risks. In 1970, Williams & Shor[4] described highway hypnosis as a hypnosis-like state characterized by reduced alertness and drowsiness, in which drivers are unable to adequately respond to changes in road conditions. This state is a syndrome that affects the ability of humans to perform several psychological functions. Shor & Thackray[5] believed that highway hypnosis refers to the tendency of drivers to become drowsy or fall asleep on monotonous and uninteresting highways. They noted that anyone required to perform continuous and repetitive tracking or monitoring tasks under monotonous conditions could be affected by this hypnosis-like state. Brown[6] further explained that even when drivers maintained the correct sitting posture, kept their eyes on the road ahead, and had their hands on the steering wheel, they could still experience a hypnosis-like state. In 2004, Cerezuela et al.[7] conducted experiments based on the hypothesis of highway hypnosis proposed by Williams in 1963. They found that drivers are more likely to experience highway hypnosis when driving on monotonous highways than on traditional roads.

Wang et al. conducted exploratory research on road hypnosis[8,9]. Road hypnosis was defined as an unconscious driving state resulting from the combined effects of external environmental factors and the psychological state of the driver. It was proposed that road hypnosis arose due to repetitive and low-frequency stimuli present in highly predictable driving environments. This state was characterized by sensory numbness, decreased attention, and reduced alertness, and was accompanied by transient states such as trance, amnesia, and fantasies. Road hypnosis could be induced by multiple factors, including endogenous factors (such as the driver's susceptibility to hypnosis, fatigue, and circadian rhythms) and exogenous factors (such as road geometry, monotony of the driving task, monotony of the driving situation, and vehicle enclosure). Once a driver emerged from the state of road hypnosis, they typically experienced a noticeable alert state. While drivers often did not remember events that occurred during the road hypnosis state, they had a clear memory of the trance-like state they just experienced. In this state, drivers might appear to maintain normal driving behavior, but their reaction times were significantly slower than in a normal driving state. To identify road hypnosis, Wang designed virtual and vehicle driving experiments to collect electrocardiogram (ECG) and electromyogram (EMG) signals, integrating the collected data to establish a model for recognizing road hypnosis.

Driving is a complex process in which drivers need to process real-time information obtained from the road environment, including the perception of road information (Situation Awareness), judgment of obtained information (Information Process), decision making, and performance of action stages. During the driving process, drivers need to perceive information to form an understanding of the surrounding environment, and attention resources need to be reasonably allocated to various driving tasks and possible nondriving tasks. The most important sensory organ is the visual organ. Research on the visual attention features of drivers is of great significance for improving the perception and cognitive level of intelligent driving vehicles. The decision-making mechanism of driving behavior can be revealed with the study. In recent years, representative studies on driver visual attention features have been as follows: Konstantopoulos et al.[10] studied the eye movements of driving school coaches and students driving through three virtual routes (day, night, and rainy days) in a driving simulator. The research results indicated that driving experience played an important role in adjusting eye movement strategies during the driving process. Wang et al.[11] developed a driver's attention focus tracking system, demonstrating that drivers optimize overall driving performance by dynamically adjusting attention allocation. Deng et al.[12] found that the driver's attention was focused on the end of the road in front of the vehicle. The driver’s attention area could be effectively simulated with the bottom-up and top-down combination traffic flow significance detection model. Hills et al.[13] explored the adverse effects of vertical eye movement on dynamic switching of driving tasks. The results showed that horizontal eye movement in drivers could identify hazards faster and more accurately, while vertical eye movement only had no significant negative impact on experienced drivers. 
Palazzi et al.[14] explored the distribution of drivers' visual attention through a computer vision model based on a multi-branch deep architecture. The results indicated that there were several common attention patterns among different drivers. Barlow et al.[15] evaluated the impact of drones on road traffic safety by determining whether drone flights near the road distract the driver's visual attention. The results showed that drones flying near the roadside or in rural environments attracted the visual attention of nearby drivers. Young et al.[16] used naturalistic driving data to examine the nature of interruptions to drivers' visual or manual secondary tasks. They found that the number of interruptions drivers tolerated differed across secondary tasks with different characteristics, including task duration and visual load. Secondary tasks with longer duration and higher visual load were more likely to be interrupted. Kimura et al.[17] evaluated the allocation of attention resources in driver visual, cognitive, and action processing. When driving at high speeds, the total amount of attention resources allocated to visual, cognitive, and motor processing increased significantly. When driving on narrow roads, the amount of attention resources allocated to visual processing increased, while the amount allocated to cognitive processing decreased. The amount of resources allocated to action processing remained unchanged, and the total amount of attention resources remained almost unchanged. Long et al.[18] proposed a lane-changing behavior recognition algorithm based on the similarity of drivers' visual scanning behavior during driving. The algorithm could effectively recognize drivers' lane-keeping, left lane-changing, and right lane-changing behaviors while taking time efficiency, accuracy, and interpretability into account. Its recognition ability was not inferior to that of neural network models based on long short-term memory (LSTM).

The physiological indicators of drivers are of considerable significance in driving behavior research and have shown excellent performance in identifying driving behaviors, especially in the study of driving fatigue and driving distraction. Habibifar & Salmanzadeh[19] used four physiological signals to identify negative emotions in drivers: ECG, EMG, electrodermal activity (EDA), and electroencephalogram (EEG). The identification results obtained with ECG and EDA signals showed greater accuracy. Wiberg et al.[20] collected physiological data from nine drivers under long-term and repetitive urban and highway scenes and studied their moderate mental load during natural driving. Hu[21] extracted the fuzzy entropy, sample entropy, and spectral entropy of the driver's EEG signal in a driving simulator. These three EEG feature vectors were input into an AdaBoost classifier. The results indicated that fuzzy entropy made the greatest contribution to the fatigue identification model. However, the feature parameters were similar to one another, and the performance of the model was not verified in actual driving environments. Du et al.[22] used a local feedback fuzzy neural network to process EEG signals. The temporal and spatial information of the EEG signals was captured, processed, and input into a convolutional recursive fuzzy network to identify driver fatigue. The experimental results showed an accuracy of 88%. Zhang et al.[23] used clustering algorithms to collect ECG spatial nodes from different driver EEG signals. Different degrees of fatigue were identified with pulse-coupled neural networks. The model had good application prospects for driver fatigue identification. However, it was neither validated on test samples nor evaluated. Awais et al.[24] extracted time-domain and frequency-domain features from EEG, and heart rate and heart rate variability parameters from ECG. The important features of the two were fused and input into an SVM model. The validation results showed that the proposed algorithm had an accuracy of 93.3% for fatigue identification. Murugan et al.[25] classified fatigue into four categories: drowsiness, inattention, fatigue, and cognitive inattention. Feature parameters such as heart rate and heart rate variability were collected with electrocardiogram sensors, and the selected features were used to train SVM, KNN, and ensemble algorithms. The experimental results showed that the model performed well in classifying a single fatigue state. However, the identification accuracy of the ensemble algorithm for four-class fatigue detection was only 58.3%. Ramos et al.[26] extracted nine main features from electromyography signals and heart rate variability to construct an SVM classifier for identifying driving fatigue. Barua et al.[27] used multi-channel active electrodes to obtain the driver's EEG and EOG signals. They trained four identification algorithms on the feature parameters: KNN, SVM, random forest, and case-based reasoning. The model was evaluated with the Karolinska Sleepiness Scale (KSS). The results showed that the SVM model performed best, with an accuracy of more than 93% for two-class fatigue identification. Mårtensson et al.[28] used a portable digital recording system to collect the driver's EEG, ECG, and EOG signals. The sequential floating forward selection algorithm was used to reduce the dimension of the feature parameters, and the selected features were used to train a random forest model. The verification results showed that the identification accuracy could reach 94.1%. However, the model performed poorly on the real data set. Wang et al.[29] used sensors on the driver's seat to collect the driver's electrocardiogram and electromyography signals. A multiple linear regression classifier was trained to identify driver fatigue. The results showed that the accuracy reached 91%.

In previous studies, eye movement parameters and bioelectricity parameters were used to establish two different road hypnosis identification models. In the study by Shi et al.[8], electrocardiogram features were used to observe and capture road hypnosis in experiments in order to obtain eye movement feature parameters under that state. Principal component analysis was used to preprocess the experimental data. A road hypnosis identification model was established based on eye movement parameters using the LSTM algorithm. The accuracy rates were 93.27% and 97.01% on the vehicle and virtual driving experimental datasets, respectively. In the study by Wang et al.[9], eye movement characteristics were used to observe and capture road hypnosis in the experiment in order to obtain the bioelectricity characteristic parameters under that state. Electrocardiogram (ECG) and electromyogram (EMG) signals were selected as characteristic parameters for identifying road hypnosis. The experimental ECG and EMG signals were preprocessed using high-order spectral feature methods, and the preprocessed signals were fused using principal component analysis. The KNN algorithm was used to establish a road hypnosis identification model, achieving accuracy rates of 97.06% and 98.84% on the vehicle and virtual experimental datasets, respectively.

In this study, a road hypnosis identification model for drivers is established using various feature parameters, which are fused with ensemble learning methods. The existence of road hypnosis can be better proved by using multiple feature parameters to identify road hypnosis. Typical monotonous scenes, including tunnel scenes and highway scenes, are selected for designing virtual driving experiments and vehicle driving experiments, respectively. Eye movement feature data and bioelectricity feature data of vehicle drivers are collected in monotonous scenes such as tunnels or highways. The LSTM algorithm is used to train eye movement data preprocessed by principal component analysis to obtain LSTM-based learners. The KNN algorithm is used to train KNN-based learners from ECG and EMG data that were preprocessed by high-order spectral feature methods and fused through principal component analysis. The SVM algorithm is used to train data predicted by LSTM based learners and KNN-based learners. A road hypnosis identification model for drivers is established. It is indicated from the results of virtual driving experiments and vehicle driving experiments that the road hypnosis can be accurately identified with the model based on the stacking ensemble learning method.

The contributions of this paper are as follows:

1. Multiple feature parameters including eye movement features and bioelectrical features are used to achieve a multi-dimensional analysis of road hypnosis states.

2. The stacking ensemble learning method is employed in this study. The identification performance is improved with this method.

3. Road hypnosis is proven to exist during driving based on vehicle driving experiments. The identification of road hypnosis is achieved in vehicle driving experiments. The experimental results are supplemented and enriched through virtual driving experiments on this basis. Finally, the identification of road hypnosis in vehicle driving is realized.


The experimental equipment used includes eye trackers, human factor equipment, portable computers, cameras, etc. An eye tracker was used to collect driver's eye movement information. Human factor equipment was used to obtain bioelectricity characteristic information of drivers. The camera was used to record the entire experimental process.

In the virtual driving experiment, a driving simulation system consisting of a Logitech G29 driving kit, a six-degrees-of-freedom platform, UC-win/Road software, and three 55-in displays was used. The virtual driving experimental environment is shown in Fig. 1.

An SUV vehicle is used in the driving experiment with an inverter provided for electrical needs. The experimental environment is shown in Fig. 2.

Experimental participants


There were a total of 50 participants in the experiment, with a male-to-female ratio of 8:2. Participants were required to have no more than 600 degrees of myopia. The distribution of age and driving experience for the drivers is shown in Table 1.

Table 1. Basic information of experimental participants.

| | Range | No. of participants |
|---|---|---|
| Age (year) | 25−35 | 26 |
| | 36−45 | 18 |
| | 46−55 | 6 |
| Driving experience (year) | 1−5 | 11 |
| | 5−10 | 18 |
| | 10−22 | 21 |

The virtual driving experiment was conducted in a laboratory environment with a driving simulator, so the safety of the experiment was guaranteed. Therefore, a fast highway was chosen as the experimental scene in the virtual driving experiment. A bidirectional four-lane expressway with a length of 40 km and a width of 15 m was generated in the simulation software. The road has no curves; all sections are straight. Trees and grass were added to both sides of the road according to a fixed proportion. The driver performed driving tasks back and forth in this scene until the end of the experiment. Tunnel scenes were not selected in the virtual driving experiment because the tunnel environment displayed on the laboratory screens is more likely to cause visual fatigue for drivers than the highway environment, which could interfere with the experimental results.

The vehicle driving experiment poses certain risks due to the need to drive vehicles on actual roads, especially on fast-moving highways. Once an accident occurs, it can easily cause serious consequences, and the safety of the experiment is difficult to ensure. Therefore, urban road sections passing through tunnels were selected as the experimental scene in the vehicle driving experiment. Qingdao Binhai Highway (Qingdao, China) was selected for the experiment. Qingdao University of Science and Technology Laoshan Campus was chosen as the start point. Shandong University Qingdao Campus was chosen as the end point. Ocean University of China Laoshan Campus was chosen as the intermediate point. The total length of the road was 36 km, with a speed limit of 80 km/h. There was a monotonous environment on both sides of the road. The Yangkou Tunnel, through which the road passes, has a total length of 7.76 km and six lanes in both directions. It is divided into two tunnels, with a left line length of 3.875 km and a right line length of 3.888 km. Each single tunnel is 14.8 m wide and 8 m high.

Experimental process


The virtual driving experiment process was as follows:

Participants in the experiment had sufficient sleep to eliminate interference caused by fatigue. The experiment started at 9:00 am on weekdays and ended at 11:00 am. In addition to the experimental participants, three colleagues from the laboratory participated in the experimental work. Before the experiment, one colleague debugged the experimental equipment, and two colleagues assisted the participant in wearing the experimental equipment. The entire experiment was video recorded. During the experiment, the driver was required to drive at a speed of 120 km/h in a single lane for 20 min. An experimental assistant observed the driver's eye movements and electrocardiogram characteristics. When the driver's gaze was focused ahead and the electrocardiogram signal remained stable, the assistant recorded this time period. In particular, when the driver appeared to enter an alert state, an experimental assistant proactively asked the driver whether he or she had just experienced road hypnosis and recorded the response. After the single driving process, the experimental assistant assisted the participant in removing the equipment. The participant had a 10 min break. An experimental assistant immediately asked the participant whether he or she had experienced any abnormal driving behaviors such as fatigue or distraction during the recent driving process and recorded them. The driver could recall whether or not he or she had experienced road hypnosis states by reviewing the experimental video and the corresponding eye movement and bioelectricity data, and the experimental assistant recorded this. After this process was completed, the driving experiment was restarted. The experimental process remained consistent, and the experimental duration was extended to 40 min. After the driving process was completed, an experimental assistant organized the equipment and the experiment ended.

The vehicle driving experiment process was as follows:

Due to traffic congestion and other issues during peak hours in the morning, the vehicle driving experiment was chosen to start at 10:00 am and ended at 12:00 noon. In addition to the experimental participants, three colleagues from the laboratory participated in the experimental work. The most important thing in vehicle driving experiments is to ensure driving safety. Any experiment should be conducted under the premise of ensuring safety. Before the experiment, one colleague needed to debug the experimental equipment, and two colleagues assisted the experimental personnel in wearing the experimental equipment. The start section of the experiment was from the Laoshan Campus of Qingdao University of Science and Technology to the Laoshan Campus of Ocean University of China. The road conditions in this section are relatively complex, and the driver maintained natural driving during this section to be familiar with the experimental equipment and vehicle driving environment. After arriving at the Laoshan Campus of Ocean University of China, the driver took a break and continued the driving process. The driver was required to avoid overtaking or lane changing behavior while driving without affecting safe driving. Driver’s attention may be distracted by frequent overtaking or lane-changing behaviors. An experimental assistant observed the driver's eye movements and electrocardiogram characteristics. The assistant recorded the time period during which the driver’s gaze was focused ahead and the electrocardiogram signal remained stable. When the driver may have experienced an alert state, the assistant proactively asked the driver if he (or she) had just experienced road hypnosis and recorded it. After arriving at the Qingdao campus of Shandong University, the experimental assistant assisted the participant in removing the equipment. The participant had a rest time of 20 min. 
An experimental assistant immediately asked the participant whether he or she had experienced any abnormal driving behaviors such as fatigue or distraction during the recent driving process and recorded them. The driver could recall whether or not he or she had experienced road hypnosis states by reviewing the experimental video and the corresponding eye movement and bioelectricity data, and this was recorded. After this process was completed, the driving experiment was continued from Shandong University Qingdao Campus to Qingdao University of Science and Technology Laoshan Campus. The experimental process remained consistent. After arriving at the destination, an experimental assistant organized the equipment and ended the experiment.


After organizing and classifying the experimental data, 50 sets of vehicle driving experimental data and 50 sets of virtual driving experimental data were obtained. The experimental data with typical road hypnosis characterization phenomena were manually screened by colleagues with relevant research experience for the construction of a road hypnosis dataset. Ten minutes of data were selected from each set of experimental data. Because road hypnosis is a state that repeatedly appears and disappears within a certain period of time, the 10 min of data selected was not an entirely continuous driving period. Similarly, 10 min of data with no abnormal driving status was selected as the normal driving dataset. After the preliminary screening was completed, the expert scoring method was used to score the two types of datasets obtained. The effectiveness of the dataset was evaluated through video playback, and the final score was confirmed. During the vehicle driving experiment, nine participants experienced fatigue due to physical exhaustion, uncomfortable sitting posture, and other reasons. Finally, 35 sets of valid video data were obtained as the vehicle driving experimental dataset, including 25 sets of normal driving and 10 sets of road hypnosis data. In the virtual driving experiment, 43 sets of valid video data were selected, including 27 sets of normal driving and 16 sets of road hypnosis data.

Methodology


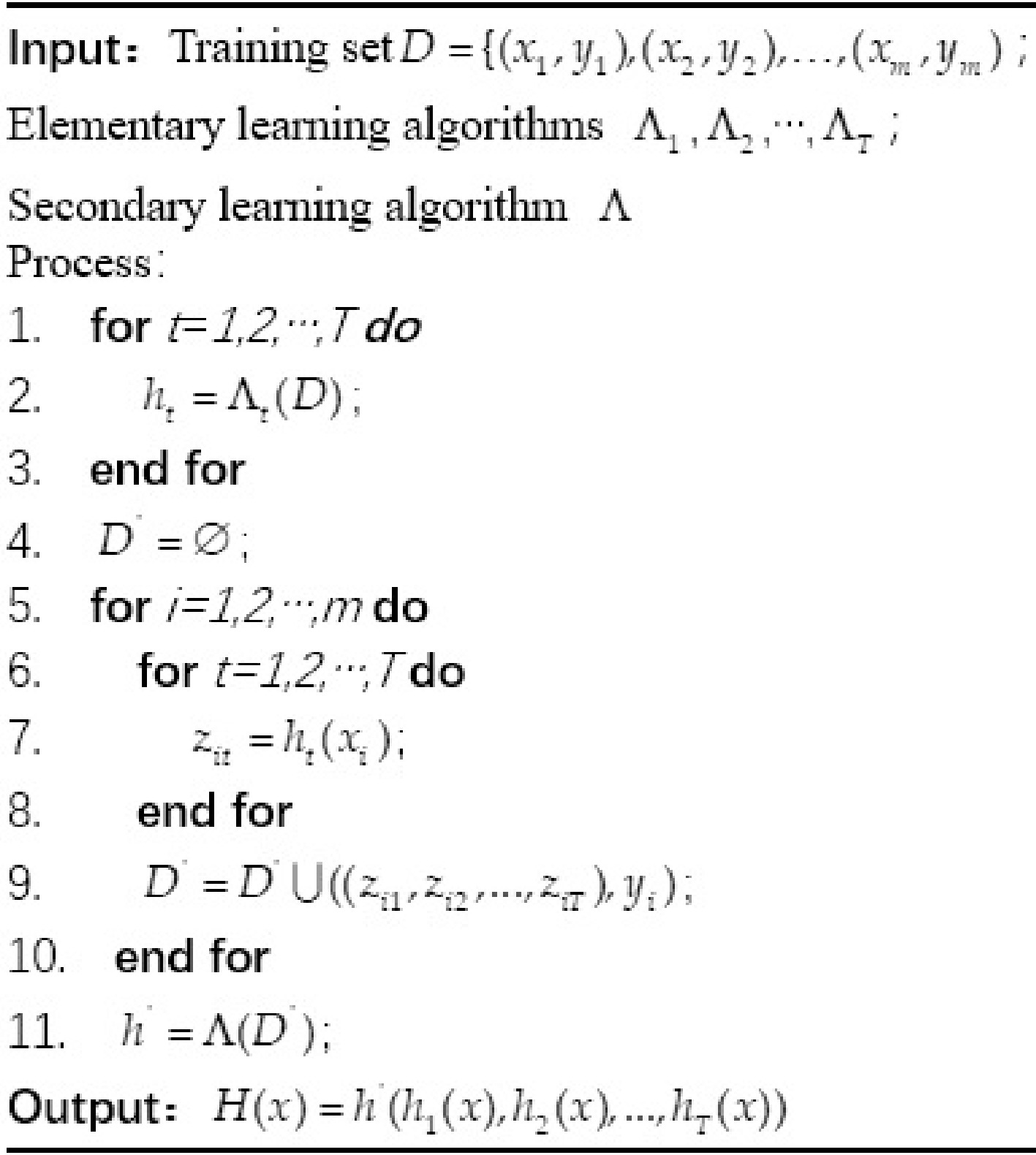

The ensemble learning method used in this study is the stacking method, which improves the overall performance of the model by combining multiple different base learners. First, the LSTM and KNN base learners are trained separately to obtain their predictions on the input data. Then, these predictions are used as features and input into the SVM model, which is trained to obtain the final ensemble model. By leveraging the strengths of both the LSTM and KNN base learners, the SVM meta-learner can more accurately identify the state of road hypnosis.

LSTM (Long Short-Term Memory) is a special type of Recurrent Neural Network (RNN) suitable for processing and predicting time series data. LSTM addresses the long-term dependency problem in traditional RNNs by introducing three gates: the forget gate, the input gate, and the output gate. In this study, LSTM is used to process and analyze the eye movement characteristics of drivers to capture their dynamic changes during driving. The preprocessed eye movement data is input into the LSTM model for training, thus obtaining the LSTM base learner for road hypnosis recognition.

KNN (k-Nearest Neighbors) is an instance-based non-parametric classification method. It predicts the class of a sample by calculating the distance between the samples to be classified and each sample in the training set, and then selecting the class of the k-nearest samples. In this study, the KNN algorithm is used to process and analyze the bioelectric characteristic data of drivers, such as electrocardiogram (ECG) and electromyogram (EMG) signals. By preprocessing the high-order spectral features and combining them with Principal Component Analysis (PCA), the KNN base learner for road hypnosis recognition is constructed in this study.
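As an illustration of the k-nearest-neighbor rule described above, the following minimal sketch classifies one sample by majority vote among its k closest training samples. The function name `knn_predict`, the Euclidean distance, the value k = 3, and the toy two-dimensional feature values are all assumptions made for illustration; they are not the fused ECG and EMG features or the settings used in the experiments.

```python
import math
from collections import Counter


def knn_predict(train_X, train_y, query, k=3):
    """Classify `query` by majority vote among its k nearest training samples."""
    # Euclidean distance from the query to every training sample.
    dists = [(math.dist(x, query), label) for x, label in zip(train_X, train_y)]
    # Keep the k closest samples and count how often each label occurs.
    nearest = sorted(dists, key=lambda d: d[0])[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical fused bioelectric features (two components per sample).
train_X = [(0.1, 0.2), (0.0, 0.3), (0.2, 0.1), (1.0, 1.1), (0.9, 1.2), (1.1, 0.9)]
train_y = ["normal", "normal", "normal", "hypnosis", "hypnosis", "hypnosis"]

print(knn_predict(train_X, train_y, (0.95, 1.0), k=3))
```

Because KNN stores the training set and defers all computation to prediction time, the choice of k and of the distance metric would normally be tuned on validation data.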

SVM (Support Vector Machine) is a supervised learning model primarily used for classification and regression analysis. SVM classifies samples of different classes by finding an optimal decision boundary in a high-dimensional space. In this study, SVM is used as the meta-learner to perform ensemble learning on the predictions of the LSTM and KNN base learners. By using the outputs of the LSTM and KNN base learners as inputs, SVM can better integrate the information from both, thereby improving the accuracy of road hypnosis recognition.
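The stacking step described above can be sketched as follows. This is only an illustration under stated assumptions: the lists `lstm_prob` and `knn_prob` are hypothetical stand-ins for the base learners' predicted probabilities of road hypnosis on held-out samples, and the meta-learner is a plain linear SVM trained by subgradient descent on the hinge loss, since the kernel and training procedure of the SVM are not specified here. The names `train_linear_svm` and `svm_predict` and the hyperparameters are likewise illustrative.

```python
def train_linear_svm(X, y, lam=0.01, lr=0.05, epochs=500):
    """Train a linear SVM (hinge loss + L2 penalty) by subgradient descent.

    Labels in y must be -1 or +1.
    """
    w = [0.0] * len(X[0])
    b = 0.0
    for _ in range(epochs):
        for xi, yi in zip(X, y):
            score = sum(wj * xj for wj, xj in zip(w, xi)) + b
            if yi * score < 1:
                # Inside the margin: follow hinge-loss and L2 subgradients.
                w = [wj - lr * (lam * wj - yi * xj) for wj, xj in zip(w, xi)]
                b += lr * yi
            else:
                # Outside the margin: only the L2 penalty shrinks w.
                w = [wj - lr * lam * wj for wj in w]
    return w, b


def svm_predict(w, b, x):
    """Return +1 (road hypnosis) or -1 (normal driving)."""
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b >= 0 else -1


# Hypothetical base-learner outputs on held-out samples (not experimental data).
lstm_prob = [0.9, 0.1, 0.8, 0.2, 0.9, 0.1, 0.6, 0.4]
knn_prob = [0.8, 0.2, 0.9, 0.1, 0.6, 0.4, 0.9, 0.1]
labels = [1, -1, 1, -1, 1, -1, 1, -1]

# Meta-features: one row per sample, one column per base learner.
meta_X = list(zip(lstm_prob, knn_prob))
w, b = train_linear_svm(meta_X, labels)

print(svm_predict(w, b, (1.0, 1.0)), svm_predict(w, b, (0.0, 0.0)))
```

In stacking, the meta-features are usually produced on data the base learners were not trained on (for example, out-of-fold predictions), so that the meta-learner does not simply learn from overfitted base outputs.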

In our previous study[8], a road hypnosis identification model was established by using eye movement data. Principal Component Analysis (PCA) was selected to preprocess the eye movement feature data. Eye tracker acquisition parameters and their meanings are shown in Table 2. Descriptive statistical analyses of eye movement data in the normal driving state and in road hypnosis are shown in Tables 3 & 4.

Table 2. Eye tracker acquisition parameters and their meanings.

| Type | Name | Explanation |
|---|---|---|
| Pupil information | Pupil Diameter Left (mm) | Left eye pupil diameter |
| | Pupil Diameter Right (mm) | Right eye pupil diameter |
| | IPD (mm) | Interpupillary distance |
| | Gaze Point X (px) | Original fixation x-coordinate in pixels |
| | Gaze Point Y (px) | Original fixation y-coordinate in pixels |
| | Gaze Point Right X (px) | X-coordinate of the original gaze point of the right eye |
| | Gaze Point Right Y (px) | Y-coordinate of the original gaze point of the right eye |
| | Gaze Point Left X (px) | X-coordinate of the original gaze point of the left eye |
| | Gaze Point Left Y (px) | Y-coordinate of the original gaze point of the left eye |
| | Fixation Point X (px) | The x-coordinate of the gaze point in pixels |
| | Fixation Point Y (px) | The y-coordinate of the gaze point in pixels |
| | Fixation Duration (ms) | Gaze duration |
| Saccade information | Saccade Single Velocity (px/ms) | Average saccade velocity per frame |
| | Saccade Velocity Peak (px/ms) | Velocity peaks during saccades |
| Blink information | Blink Index | Blink Index |
| | Blink Duration (ms) | Duration of blink |
| | Blink Eye | Blink label: 0 for double wink; 1 for left wink; 2 for right wink |

Table 3. Descriptive statistical analysis of eye movement data in normal driving state.

| Name | N | Minimum value | Maximum value | Average value | Standard deviation | Variance |
|---|---|---|---|---|---|---|
| Saccade Velocity Average (px/ms) | 68292 | 0.500 | 1.306 | 0.712 | 0.168 | 0.028 |
| Pupil Diameter Left (mm) | 68292 | 3.041 | 4.275 | 3.751 | 0.211 | 0.045 |
| Pupil Diameter Right (mm) | 68292 | −1.534 | 4.232 | 3.677 | 0.413 | 0.170 |
| IPD (mm) | 68292 | 66.345 | 67.618 | 67.004 | 0.161 | 0.026 |
| Gaze Point X (px) | 68292 | 330.646 | 842.180 | 632.310 | 62.291 | 3880.145 |
| Gaze Point Y (px) | 68292 | 176.772 | 380.967 | 295.534 | 26.781 | 717.240 |
| Gaze Point Left X (px) | 68292 | 362.979 | 866.104 | 636.314 | 64.265 | 4129.937 |
| Gaze Point Left Y (px) | 68292 | 180.498 | 633.565 | 390.844 | 80.357 | 6457.305 |
| Gaze Point Right X (px) | 68292 | 298.318 | 829.718 | 627.969 | 66.181 | 4379.910 |
| Gaze Point Right Y (px) | 68292 | −157.189 | 512.873 | 204.823 | 106.666 | 11377.556 |
| Gaze Origin Left X (mm) | 68292 | −5.497 | −4.155 | −4.778 | 0.175 | 0.031 |
| Gaze Origin Left Y (mm) | 68292 | −7.894 | −5.095 | −5.804 | 0.361 | 0.130 |
| Gaze Origin Left Z (mm) | 68292 | −35.869 | −33.335 | −34.054 | 0.283 | 0.080 |
| Gaze Origin Right X (mm) | 68292 | 4.728 | 5.333 | 5.104 | 0.119 | 0.014 |
| Gaze Origin Right Y (mm) | 68292 | −4.630 | −2.530 | −3.332 | 0.301 | 0.091 |
| Gaze Origin Right Z (mm) | 68292 | −32.927 | −31.464 | −32.051 | 0.293 | 0.086 |
| Fixation Duration (ms) | 68292 | 66.000 | 90966.000 | 23206.851 | 22645.982 | 512840520.244 |
| Fixation Point X (px) | 68292 | 402.268 | 851.052 | 625.487 | 54.279 | 2946.158 |
| Fixation Point Y (px) | 68292 | 125.196 | 343.138 | 299.244 | 14.902 | 222.064 |

N is the sample size.

Table 4. Descriptive statistical analysis of eye movement data in road hypnosis.

| Name | N | Minimum value | Maximum value | Average value | Standard deviation | Variance |
|---|---|---|---|---|---|---|
| Saccade Velocity Average (px/ms) | 42775 | 0.00001 | 0.500 | 0.174 | 0.146 | 0.021 |
| Pupil Diameter Left (mm) | 42775 | 2.791 | 4.301 | 3.629 | 0.231 | 0.053 |
| Pupil Diameter Right (mm) | 42775 | −1.307 | 4.323 | 3.636 | 0.277 | 0.077 |
| IPD (mm) | 42775 | 66.149 | 67.536 | 67.017 | 0.125 | 0.016 |
| Gaze Point X (px) | 42775 | 320.779 | 855.683 | 603.739 | 56.414 | 3182.571 |
| Gaze Point Y (px) | 42775 | 160.197 | 393.530 | 302.320 | 20.799 | 432.589 |
| Gaze Point Left X (px) | 42775 | 355.348 | 856.234 | 616.640 | 59.496 | 3539.833 |
| Gaze Point Left Y (px) | 42775 | 165.291 | 655.587 | 386.817 | 71.875 | 5165.952 |
| Gaze Point Right X (px) | 42775 | 236.304 | 854.082 | 590.594 | 57.633 | 3321.547 |
| Gaze Point Right Y (px) | 42775 | −186.220 | 513.097 | 218.809 | 84.647 | 7165.129 |
| Gaze Origin Left X (mm) | 42775 | −5.450 | −4.140 | −4.871 | 0.170 | 0.029 |
| Gaze Origin Left Y (mm) | 42775 | −7.917 | −4.972 | −5.682 | 0.347 | 0.120 |
| Gaze Origin Left Z (mm) | 42775 | −35.888 | −33.288 | −34.064 | 0.265 | 0.070 |
| Gaze Origin Right X (mm) | 42775 | 4.548 | 5.374 | 5.127 | 0.100 | 0.010 |
| Gaze Origin Right Y (mm) | 42775 | −4.590 | −2.518 | −3.304 | 0.234 | 0.055 |
| Gaze Origin Right Z (mm) | 42775 | −32.966 | −31.306 | −31.926 | 0.248 | 0.062 |
| Fixation Duration (ms) | 42775 | 167.000 | 90966 | 40057.15 | 30047.406 | 902846577 |
| Fixation Point X (px) | 42775 | 402.268 | 851.052 | 608.402 | 39.981 | 1598.472 |
| Fixation Point Y (px) | 42775 | 59.117 | 408.848 | 302.290 | 12.971 | 168.255 |

N is the sample size.

Finally, six and five principal components are obtained after processing the virtual experimental data and the vehicle driving experimental data with principal component analysis, respectively. Then, the preprocessed data is used to train the LSTM network to establish road hypnosis identification models based on the vehicle driving experiment and the virtual driving experiment. The accuracy rates of the two models are 93.27% and 97.01%, respectively. The principal component comprehensive models of the virtual experiment and vehicle experiment are shown in Eqns (1) and (2), respectively.

$ C = \sum\limits_{n = 1}^6 {\dfrac{{{\lambda _n}}}{{\displaystyle\sum\limits_{i = 1}^6 {{\lambda _i}} }}} \times {C_n} $ (1)

$ C = \sum\limits_{n = 1}^5 {\dfrac{{{\lambda _n}}}{{\displaystyle\sum\limits_{i = 1}^5 {{\lambda _i}} }}} \times {C_n} $ (2)

where $C$ is the principal component, $C_n$ is the $n$th principal component coefficient, $\lambda_n$ is the eigenvalue of the $n$th principal component, and $\lambda_i$ is the eigenvalue of the $i$th principal component.
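The weighting in Eqns (1) and (2) can be illustrated with a short sketch: each component is weighted by its eigenvalue divided by the sum of the retained eigenvalues, so the weights sum to one. The function name `composite_score` and the eigenvalues and component values below are hypothetical, and treating $C_n$ as the value of the $n$th component for one sample is an assumption made for this example.

```python
def composite_score(eigenvalues, components):
    """Eigenvalue-weighted sum of principal components, as in Eqns (1) and (2)."""
    if len(eigenvalues) != len(components):
        raise ValueError("eigenvalues and components must have the same length")
    total = sum(eigenvalues)
    # Weight of component n is lambda_n / sum_i lambda_i.
    return sum(lam / total * c for lam, c in zip(eigenvalues, components))


# Hypothetical values for five retained components (vehicle-experiment case).
eigenvalues = [5.0, 2.0, 1.0, 1.0, 1.0]
components = [1.0, 0.5, -1.0, 2.0, 0.0]

print(composite_score(eigenvalues, components))
```

With these illustrative numbers the weights are 0.5, 0.2, 0.1, 0.1, and 0.1, so the first component dominates the composite value.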

The LSTM network consists of three gates: the forget gate, the input gate, and the output gate. The forget gate, the input gate with its candidate cell state, and the cell state update are given in Eqns (3), (4), and (5), respectively.

$ {f_t} = \sigma ({W_f} \cdot [{h_{t - 1}},{x_t}] + {b_f}) $ (3)

$ \begin{gathered} {\tilde C _t} = \tanh ({W_C} \cdot [{h_{t - 1}},{x_t}] + {b_C}) \\ {i_t} = \sigma ({W_i} \cdot [{h_{t - 1}},{x_t}] + {b_i}) \end{gathered} $ (4)

$ {C_t} = {f_t} * {C_{t - 1}} + {i_t} * {\tilde C _t} $ (5)

where $f_t$ is the forget gate, $\sigma$ is the sigmoid function, and ${\tilde C _t}$ is the candidate cell state.

The LSTM network is used to train the data preprocessed by principal component analysis. Road hypnosis identification models are established with virtual experimental datasets and vehicle driving experimental datasets, respectively.
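As an illustration of Eqns (3) to (5), the following sketch performs one cell-state update in plain Python. The weights, biases, and layer sizes are hypothetical toy values, the helper names are assumptions of this sketch, and the output gate is omitted because the equations above do not cover it.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def affine(weights, vector, bias):
    """Compute W . v + b for a matrix stored as a list of rows."""
    return [sum(w * v for w, v in zip(row, vector)) + b
            for row, b in zip(weights, bias)]


def lstm_cell_update(h_prev, x_t, c_prev, p):
    """Return (f_t, i_t, candidate, C_t) following Eqns (3)-(5)."""
    concat = h_prev + x_t  # the concatenation [h_{t-1}, x_t]
    f_t = [sigmoid(z) for z in affine(p["W_f"], concat, p["b_f"])]        # Eqn (3)
    c_tilde = [math.tanh(z) for z in affine(p["W_C"], concat, p["b_C"])]  # Eqn (4)
    i_t = [sigmoid(z) for z in affine(p["W_i"], concat, p["b_i"])]        # Eqn (4)
    c_t = [f * c + i * g
           for f, c, i, g in zip(f_t, c_prev, i_t, c_tilde)]              # Eqn (5)
    return f_t, i_t, c_tilde, c_t


# Hypothetical toy parameters: hidden size 2, input size 1.
params = {
    "W_f": [[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], "b_f": [0.0, 0.1],
    "W_i": [[-0.4, 0.6, 0.2], [0.7, -0.1, 0.3]], "b_i": [0.0, 0.0],
    "W_C": [[0.2, 0.2, -0.3], [-0.6, 0.4, 0.9]], "b_C": [0.1, -0.1],
}
f_t, i_t, c_tilde, c_t = lstm_cell_update([0.1, -0.3], [1.0], [0.5, -0.5], params)
print(f_t, i_t, c_tilde, c_t)
```

With all weights and biases set to zero, both gates equal 0.5 and the candidate is 0, so the new cell state is simply half of the previous one, which is a quick sanity check of the update rule.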

In another study, bioelectricity data is used to establish a road hypnosis identification model. When preprocessing bioelectricity data, the method of high-order spectral features is chosen to process the ECG and EMG data obtained from the experiment. The processed ECG and EMG data can be further fused through principal component analysis. Bioelectricity characteristic parameters and their meanings are shown in Table 5. A descriptive statistical analysis of bioelectricity characteristic parameters is shown in Table 6.

Table 5. Bioelectricity characteristic parameters and their meanings.

| Name | Explanation |
|---|---|
| ECG Signal (mV) | ECG signal amplitude in millivolts |
| Heart Rate (bpm) | Heart rate in beats per minute |
| R-R Interval (ms) | Interval between consecutive R-waves |
| SDNN (ms) | Standard deviation of NN intervals |

Table 6. Descriptive statistical analysis of bioelectricity characteristic parameters.

| State | Minimum value | Maximum value | Average value | Average value (standard error) | Standard deviation | Variance | Skewness | Skewness (standard error) | Kurtosis | Kurtosis (standard error) |
|---|---|---|---|---|---|---|---|---|---|---|
| Road hypnosis | −1827.865 | 2595.328 | −0.017 | 1.026 | 332.738 | 110714.259 | 2.961 | 0.008 | 19.341 | 0.015 |
| Normal state | −1672.206 | 2353.899 | 0.092 | 2.018 | 310.955 | 96692.828 | 3.071 | 0.016 | 20.910 | 0.032 |

Linear Discriminant Analysis (LDA), Quadratic Discriminant Analysis (QDA), and the KNN algorithm are used to train and classify the preprocessed data. Road hypnosis identification models based on virtual driving experiments and vehicle driving experiments are trained, respectively. The two models trained using the KNN algorithm have the highest accuracy, at 99.84% and 97.06%, respectively.

The high-order spectral feature method is used to preprocess the ECG and EMG data collected in the experiment. The first-order to third-order moments are calculated in a similar way, as follows:

$ {m_1} = E\left[ X \right] $ (6)

$ {m_2}\left( {{\tau _1}} \right) = E\left[ {X\left( K \right) \cdot X\left( {K + {\tau _1}} \right)} \right] = {c_2}\left( {{\tau _1}} \right) $ (7)

$ {m_3}\left( {{\tau _1},{\tau _2}} \right) = E\left[ {X\left( K \right) \cdot X\left( {K + {\tau _1}} \right) \cdot X\left( {K + {\tau _2}} \right)} \right] = {c_3}\left( {{\tau _1},{\tau _2}} \right) $ (8)

The calculation method of the fourth-order moment is different from the first three orders. The calculation formula is as follows:

$ {m_4}\left( {{\tau _1},{\tau _2},{\tau _3}} \right) = E\left[ {X\left( K \right) \cdot X\left( {K + {\tau _1}} \right) \cdot X\left( {K + {\tau _2}} \right) \cdot X\left( {K + {\tau _3}} \right)} \right] $ (9)

$ \begin{split} m_4\left(\tau_1,\tau_2,\tau_3\right)=\; & c_4\left(\tau_1,\tau_2,\tau_3\right)+c_2\left(\tau_1\right)\cdot c_2\left(\tau_3-\tau_2\right)+ \\ &c_2\left(\tau_2\right)\cdot c_2\left(\tau_3-\tau_1\right)+c_2\left(\tau_3\right)\cdot c_2\left(\tau_2-\tau_1\right) \end{split} $ (10)

In this study, three features are collected from the bispectrum of the signal: the sum of the logarithmic amplitudes of the bispectrum (S1), the sum of the logarithmic amplitudes of the diagonal elements of the bispectrum (S2), and the first-order spectral moment of the amplitudes of the diagonal elements of the bispectrum (S3), as follows:

$ {S_1} = \sum\limits_\Omega {\log \left( {\left| {B\left( {{f_1},{f_2}} \right)} \right|} \right)} $ (11)

$ {S_2} = \sum\limits_\Omega {\log \left( {\left| {B\left( {{f_k},{f_k}} \right)} \right|} \right)} $ (12)

$ {S_3} = \sum\limits_{k = 1}^N {k\log \left( {\left| {B\left( {{f_k},{f_k}} \right)} \right|} \right)} $ (13)

where $B(f_1, f_2)$ is the Fourier transform of the third-order cumulant at frequencies $f_1$ and $f_2$, and $k$ is the counting variable.
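The three bispectral features can be sketched as follows. Several choices here are assumptions of this sketch rather than details given in the text: the bispectrum is estimated directly from a single segment as X(f1)·X(f2)·conj(X(f1+f2)) after removing the mean, the region Ω is taken as the triangle 1 ≤ f2 ≤ f1 with f1 + f2 ≤ N/2, a small `eps` guards against log(0), and the test signal is synthetic.

```python
import cmath
import math


def dft(signal):
    """Plain discrete Fourier transform, adequate for short illustrative signals."""
    n = len(signal)
    return [sum(x * cmath.exp(-2j * math.pi * k * t / n)
                for t, x in enumerate(signal))
            for k in range(n)]


def bispectral_features(signal, eps=1e-12):
    """Return (S1, S2, S3) of Eqns (11)-(13) under the assumptions stated above."""
    n = len(signal)
    mean = sum(signal) / n
    spectrum = dft([x - mean for x in signal])
    half = n // 2

    def log_amplitude(f1, f2):
        # Direct single-segment bispectrum estimate at the bin pair (f1, f2).
        b = spectrum[f1] * spectrum[f2] * spectrum[f1 + f2].conjugate()
        return math.log(abs(b) + eps)

    # Assumed region Omega: triangular set of bin pairs.
    omega = [(f1, f2)
             for f1 in range(1, half + 1)
             for f2 in range(1, f1 + 1)
             if f1 + f2 <= half]
    diagonal = [k for k in range(1, half + 1) if 2 * k <= half]

    s1 = sum(log_amplitude(f1, f2) for f1, f2 in omega)
    s2 = sum(log_amplitude(k, k) for k in diagonal)
    s3 = sum(k * log_amplitude(k, k) for k in diagonal)
    return s1, s2, s3


# Synthetic 16-sample signal standing in for a short ECG or EMG segment.
signal = [math.sin(0.9 * t) + 0.5 * math.cos(2.3 * t + 0.4) for t in range(16)]
s1, s2, s3 = bispectral_features(signal)
print(s1, s2, s3)
```

Because the bispectrum is a product of three spectral values, doubling the signal amplitude adds 3·log(2) to every logarithmic term, which gives a simple consistency check for an implementation.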

The preprocessed ECG and EMG data can be fused with the principal component analysis method. The principal components are calculated by determining the eigenvectors and eigenvalues of the covariance matrix. The covariance matrix measures how much the dimensions vary together relative to their means. The covariance of two random variables describes their tendency to vary together and is calculated as follows:

$ {\rm{cov}} \left( {X:Y} \right) = \sum\limits_{i = 1}^N {\dfrac{{\left( {{x_i} - \overline x } \right)\left( {{y_i} - \overline y } \right)}}{N}} $ (14)

where $x_i$ is the $i$-th observation of the variable $X$, ${y_i}$ is the $i$-th observation of the variable $Y$, and $\overline x $ and $\overline y $ are the means of $X$ and $Y$, respectively.

The preprocessed data is trained with the KNN algorithm. Road hypnosis identification models can be established with virtual experimental datasets and vehicle driving experimental datasets, respectively.
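As a small illustration of Eqn (14), the sketch below computes the covariance with the 1/N normalization used in the equation and assembles the covariance matrix whose eigenvectors define the principal components. The two feature columns are hypothetical numbers, and the function names are assumptions of this sketch.

```python
def covariance(xs, ys):
    """Covariance of two equally long samples, divided by N as in Eqn (14)."""
    n = len(xs)
    if n == 0 or n != len(ys):
        raise ValueError("samples must be non-empty and of equal length")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    return sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / n


def covariance_matrix(columns):
    """Pairwise covariances of a list of feature columns."""
    return [[covariance(a, b) for b in columns] for a in columns]


# Hypothetical feature columns, for example one ECG and one EMG feature.
ecg_feature = [1.0, 2.0, 3.0, 4.0]
emg_feature = [2.0, 4.0, 6.0, 8.0]
matrix = covariance_matrix([ecg_feature, emg_feature])
print(matrix)
```

The matrix is symmetric, and its diagonal holds the variance of each feature.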

Road hypnosis identification model

-

The purpose of this study is to identify road hypnosis by integrating the eye movement characteristics and bioelectricity characteristics of drivers. Therefore, the Stacking method in ensemble learning is chosen to establish a road hypnosis identification model. The Stacking algorithm is a heterogeneous integration method based on multiple different base learners. For road hypnosis, first-level learners can be constructed on the eye movement feature data and the bioelectricity feature data, respectively. A second-level learner can then be constructed on the prediction results of the two first-level learners so that the two first-level learners complement each other. This can effectively reduce the error caused by factors such as overfitting. The first-level learners are called base learners, and the second-level learner is called the meta-learner. The algorithm calculation process is shown in Fig. 3.
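The two-level scheme can be sketched in plain Python as follows. This is a simplified illustration under stated assumptions, not the model used in the experiments: a small KNN classifier stands in for both base learners, a majority lookup table stands in for the meta-learner, the data is synthetic and well separated, and all function names are hypothetical. The point of the sketch is the out-of-fold flow, in which the meta-learner only sees base predictions made on samples the base learner was not trained on.

```python
import random


def knn_predict(train_x, train_y, query, k=3):
    """Majority vote among the k nearest training points (squared Euclidean distance)."""
    order = sorted(
        range(len(train_x)),
        key=lambda i: sum((a - b) ** 2 for a, b in zip(train_x[i], query)),
    )
    nearest = order[:k]
    votes = sum(train_y[i] for i in nearest)
    return 1 if 2 * votes > len(nearest) else 0


def k_fold_indices(n, k, seed=0):
    """Shuffle the sample indices once and deal them into k disjoint folds."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]


def out_of_fold_predictions(xs, ys, folds):
    """Predict each sample with a base learner trained on the other folds only."""
    preds = [None] * len(xs)
    for fold in folds:
        held_out = set(fold)
        train = [i for i in range(len(xs)) if i not in held_out]
        train_x = [xs[i] for i in train]
        train_y = [ys[i] for i in train]
        for i in fold:
            preds[i] = knn_predict(train_x, train_y, xs[i])
    return preds


def fit_meta(meta_features, ys):
    """Stand-in meta-learner: majority label for each pair of base predictions."""
    counts = {}
    for feats, y in zip(meta_features, ys):
        counts.setdefault(tuple(feats), [0, 0])[y] += 1
    return {key: (1 if c[1] > c[0] else 0) for key, c in counts.items()}


def stacked_predict(meta, eye_pred, bio_pred):
    """Look up the meta decision; fall back to the first base prediction if unseen."""
    return meta.get((eye_pred, bio_pred), eye_pred)


# Synthetic toy data: label 1 = road hypnosis, label 0 = normal state.
rng = random.Random(1)
labels = [i % 2 for i in range(20)]
eye_features = [[rng.random() + 5 * y, rng.random() + 5 * y] for y in labels]
bio_features = [[rng.random() + 5 * y] for y in labels]

# The same folds are used for both views so the meta features stay aligned per sample.
folds = k_fold_indices(len(labels), k=5)
eye_oof = out_of_fold_predictions(eye_features, labels, folds)
bio_oof = out_of_fold_predictions(bio_features, labels, folds)
meta = fit_meta(list(zip(eye_oof, bio_oof)), labels)
stacked = [stacked_predict(meta, e, b) for e, b in zip(eye_oof, bio_oof)]
print(sum(s == y for s, y in zip(stacked, labels)) / len(labels))
```

In practice the stand-ins would be replaced by the actual base and meta models; the fold handling and the assembly of meta features stay the same.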

In this study, the LSTM model and the KNN model are selected as the two base learners to learn the eye movement feature data and bioelectricity feature data collected in the experiments. The SVM model is used as the meta-learner. The same processing method is used for both the vehicle driving experimental data and the virtual experimental data. When training the base learners, the experimental data is divided into a training set and a testing set using k-fold cross-validation. The value of k is 5, so 80% of the data is selected as the training set each time and the remaining 20% is used as the test set. The eye movement data is trained with the LSTM algorithm to obtain the LSTM-based learners. The ECG and EMG data are trained with the KNN algorithm to obtain the KNN-based learners. The SVM algorithm is then used to train on the data predicted by the LSTM-based learners and the KNN-based learners, and a road hypnosis identification model is established. The algorithm principle of the SVM model is as follows:

(1) The appropriate kernel function is K(x, z) and penalty function is C > 0. The convex quadratic programming problem can be constructed and solved with Eqn (15):

$ f(x) = \mathop {\min }\limits_\alpha \dfrac{1}{2}\sum\limits_{i = 1}^N {\sum\limits_{j = 1}^N {{\alpha _i}} } {\alpha _j}{y_i}{y_j}K({x_i},{x_j}) - \sum\limits_{i = 1}^N {{\alpha _i}} $ (15) where, αi and αj are the Lagrange multiplier of SVM. yi and yj are the sample label. K(xi, xj)

$ K({x_i},{x_j}) $ $ \sum\limits_{i = 1}^N {{\alpha _i}} $ (2) The component

$\alpha _j^*$ $0 < \alpha _j^* < C$ $ f(x) = sign\left(\sum\limits_1^N {\alpha _i^*} {y_i}K(x,{x_i}) + {b^*}\right) $ (16) $ {b^*} = {y_j} - \sum\limits_{i = 1}^N {\alpha _i^*} {y_i}K({x_i} \cdot {x_j}) $ (17) where, αi is the Lagrange multiplier of SVM. yi and yj are the sample label. K(x, xi) is the kernel function. b* is the bias term.

(3) When the kernel function is the commonly used Gaussian kernel function

$K(x,z) = \exp ( - \dfrac{{{{\left\| {x - z} \right\|}^2}}}{{2{\sigma ^2}}})$ $ f(x) = sign\left(\sum\limits_{i = 1}^N {\alpha _i^*} {y_i}\exp \left( - \dfrac{{{{\left\| {x - z} \right\|}^2}}}{{2{\sigma ^2}}}\right) + {b^*}\right) $ (18) where,

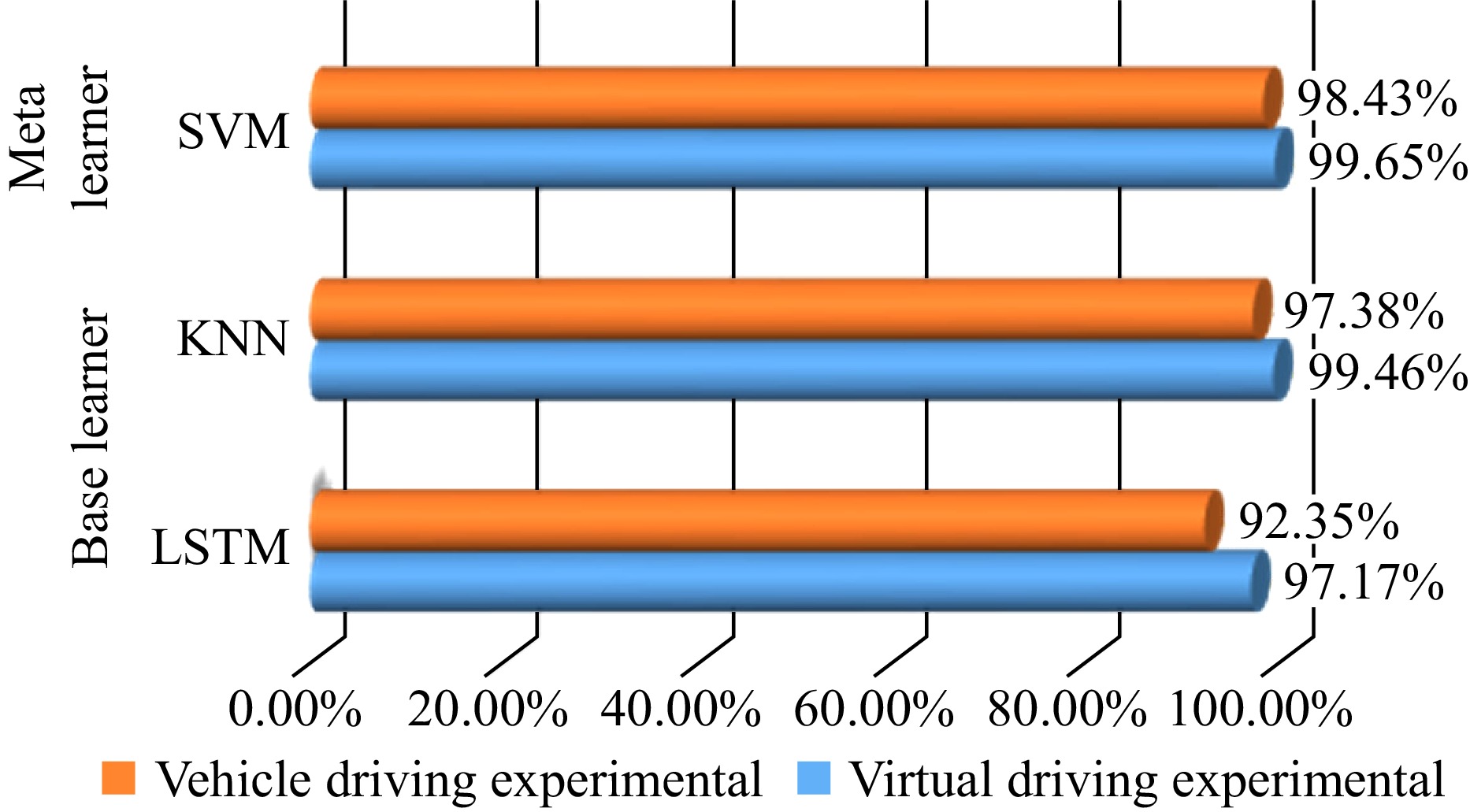

$\alpha _i^*$ $\exp ( - \dfrac{{{{\left\| {x - z} \right\|}^2}}}{{2{\sigma ^2}}})$ The classification results are shown in Fig. 4.

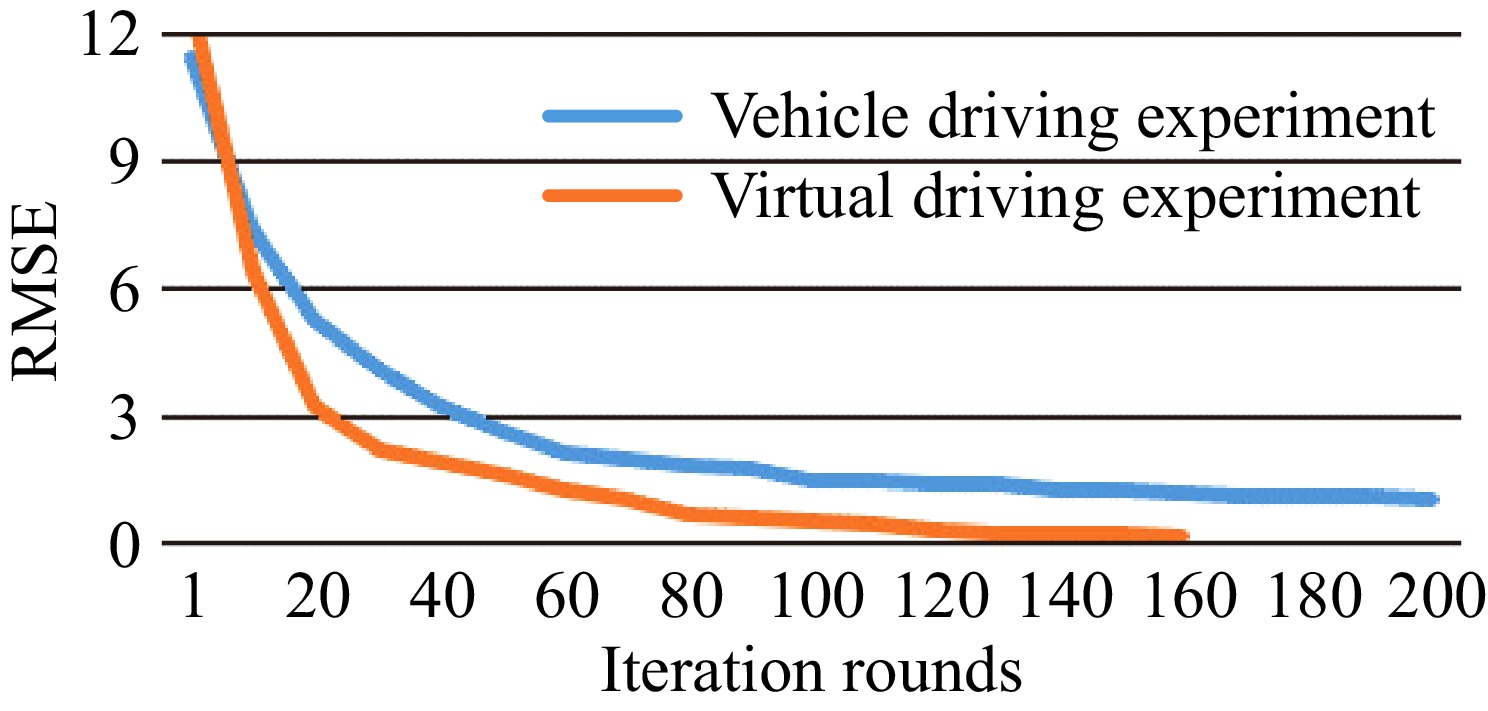
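The decision rule in Eqns (16) to (18) can be checked on a toy problem small enough to solve by hand. The sketch below assumes two training points with opposite labels, for which the dual problem in Eqn (15) has the closed-form solution α1 = α2 = 1 / (1 − K(x1, x2)) whenever C exceeds that value. The points, the kernel width σ = 1, and the function names are hypothetical choices made for illustration.

```python
import math


def gaussian_kernel(x, z, sigma):
    """Gaussian kernel exp(-||x - z||^2 / (2 sigma^2))."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-squared_distance / (2.0 * sigma ** 2))


def bias_term(alphas, labels, points, j, sigma):
    """Eqn (17): b* = y_j - sum_i alpha_i* y_i K(x_i, x_j)."""
    return labels[j] - sum(
        a * y * gaussian_kernel(x, points[j], sigma)
        for a, y, x in zip(alphas, labels, points)
    )


def decision_value(x, alphas, labels, points, bias, sigma):
    """Argument of the sign function in Eqn (18)."""
    return sum(
        a * y * gaussian_kernel(x, p, sigma)
        for a, y, p in zip(alphas, labels, points)
    ) + bias


def classify(x, alphas, labels, points, bias, sigma):
    return 1 if decision_value(x, alphas, labels, points, bias, sigma) >= 0 else -1


# Toy problem: one point per class, labels +1 and -1.
sigma = 1.0
points = [[0.0, 0.0], [2.0, 0.0]]
labels = [1, -1]

# Closed-form dual optimum for this two-point case (assumes C is larger than alpha).
k12 = gaussian_kernel(points[0], points[1], sigma)
alpha = 1.0 / (1.0 - k12)
alphas = [alpha, alpha]

b_star = bias_term(alphas, labels, points, j=0, sigma=sigma)
print(classify([0.2, 0.1], alphas, labels, points, b_star, sigma))
print(classify([1.8, -0.1], alphas, labels, points, b_star, sigma))
```

For this symmetric problem the bias term is zero and both training points lie exactly on the margin, so their decision values are +1 and −1.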

Root Mean Square Error (RMSE) is used to evaluate the performance of the fused model. RMSE represents the sample standard deviation of the difference between predicted and observed values, which can indicate the degree of dispersion of the sample. The calculation is as follows:

$ RMSE = \sqrt {\dfrac{1}{n}\sum\limits_{i = 1}^n {{{\left| {{{\hat y}_i} - {y_i}} \right|}^2}} } $ (19)

where $RMSE$ is the root-mean-square error, $y_i$ is the true value, and $ {\hat y_i} $ is the predicted value.
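A minimal sketch of Eqn (19) is given below. The predicted and true labels are hypothetical values (1 for road hypnosis, 0 for normal state), and the function name `rmse` is an assumption of this sketch.

```python
import math


def rmse(predicted, observed):
    """Root-mean-square error between predicted and true values, as in Eqn (19)."""
    if len(predicted) != len(observed) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    squared_errors = [(p - o) ** 2 for p, o in zip(predicted, observed)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Hypothetical predictions: two of four samples are misclassified.
predicted = [1, 0, 1, 1]
observed = [1, 1, 1, 0]
print(rmse(predicted, observed))
```

For 0/1 labels the squared error of each sample is either 0 or 1, so the RMSE equals the square root of the misclassification rate.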

As shown in Fig. 4, the accuracy of the model is further improved by fusing the two base learner models with a meta-learner. To further validate the performance improvement brought about by integrating the two models with ensemble learning, the root mean square error (RMSE) is used to observe the degree of improvement. As shown in Fig. 5, in the training on vehicle driving experimental data, the RMSE of the model no longer changes once the iteration reaches around 180 rounds, so the model has converged well. In the training on virtual experimental data, the RMSE of the model remains unchanged once the iteration reaches about 120 rounds. This indicates that the fused model can identify road hypnosis more accurately, and its performance is further improved compared with models based on a single type of feature. Compared to vehicle driving experiments, models trained with virtual driving experimental data converge faster. This is consistent with the conclusions of previous studies. The experimental conditions of virtual driving are more controllable, with less irrelevant interference such as pedestrians and other vehicles, so road hypnosis is induced more effectively and the virtual driving scene shows more typical road hypnosis characteristics. However, the results of the vehicle driving experiments also show that road hypnosis exists in the actual driving environment and can be identified through the model established by fusing eye movement feature parameters and bioelectricity feature parameters.

In previous studies, eye movement parameters and bioelectricity parameters are used to establish road hypnosis identification models. In the study by Kimura et al.[17], electrocardiogram features are used to observe and capture road hypnosis in experiments in order to obtain the eye movement feature parameters in that state. In this study, eye movement features are used to observe and capture road hypnosis in the experiment in order to obtain the bioelectricity feature data of road hypnosis. The difference lies in the experimental process. The characteristic parameters of road hypnosis are determined not through a single feature but through both the driver's eye movement data and bioelectricity data. The data for identifying road hypnosis can be collected during the period in which the driver's eyes are focused on the center of the road ahead and the ECG is stable. The improved experimental process avoids the impact of cognitive distraction and other simple interfering factors. For example, when a driver is stimulated during normal driving, they will also experience an alert state. If the level of stimulation is low or the driver has strong psychological qualities, the external performance characteristics of the driver may not be obvious, and this state can easily be mistaken for road hypnosis. Compared to the experimental methods in previous studies, the proposed method can collect the characteristic parameters of road hypnosis more accurately, so the accuracy of road hypnosis identification can be further improved.

In this study, the eye movement data and bioelectricity data are fused to identify road hypnosis. Road hypnosis is not an occasional abnormal driving state but a common occurrence in daily driving. Its external manifestation is similar to that of cognitive distraction. However, the two states differ in definition and internal mechanism. If the driver's cognitive resources are not focused on the main driving task, the state is considered cognitive distraction. In road hypnosis, the driver's cognitive resources are likewise not focused on the main driving task, but they are not occupied by any other specific task either. Therefore, whether in virtual driving experiments or vehicle driving experiments, the method of using secondary tasks to induce road hypnosis is not adopted. As a result, it is difficult to completely distinguish between road hypnosis and cognitive distraction. The experiment is conducted under natural driving conditions without other human interference, so that the participants drive the vehicle naturally in an environment prone to road hypnosis. While the driver's eye movement characteristics and bioelectricity characteristics are observed during driving, the experimenters actively ask questions to determine whether road hypnosis has occurred. In this way, cognitive distraction is excluded as much as possible through the experimental method. Participants are required to have a good rest before the experiment. Immediately after the end of each experiment, the driver is asked if he or she has experienced fatigue or distraction. Data that has been affected by fatigue or distraction is removed from the dataset. Therefore, in both the experimental method and the data processing, the interference caused by driving fatigue and distraction has been effectively eliminated.

Several aspects of this study can still be improved:

(1) In the experimental process, drivers do not need to complete specific tasks, so that a natural driving state is maintained. However, the active inquiry process used to determine the occurrence of road hypnosis inevitably causes some interference. Obvious external features of road hypnosis could instead be selected to determine its occurrence, which would avoid the interference caused by active questioning.

(2) Typical monotonous scenes are selected as experimental scenarios to induce road hypnosis and obtain characteristic data of road hypnosis. However, road hypnosis does not only appear in monotonous scenes. It appears more often in highly predictable environments during daily driving. For example, a driver is prone to fall into road hypnosis in a familiar driving environment that is not monotonous. A reasonable and highly predictable environment can be selected for experiments to train a road hypnosis identification model with more general applicability and stronger generalization ability.

(3) Two different types of data are integrated through ensemble learning. The fused data is collected with the same experimental method, either virtual driving experiments or vehicle driving experiments. At present, the data from the virtual driving experiment and the vehicle driving experiment have not been integrated, because the driving experience of drivers and the experimental scenarios differ between the two. In subsequent research, virtual driving experiments and vehicle driving experiments with identical experimental conditions should be designed. Closed highway sections can be used for vehicle driving experimental verification.

-

In this paper, the vehicle driving experiment and the virtual driving experiment are designed to collect eye movement feature data and bioelectricity feature data of drivers in monotonous scenes, such as tunnels or highways. A road hypnosis identification model based on ensemble learning is proposed. The main work includes the following aspects:

(1) The vehicle driving experiment and the virtual driving experiment are designed and organized. The eye movement and bioelectricity characteristic data of 50 experimental participants are collected. Combined with the playback of experimental videos, the experimental data is screened with the expert scoring method. Road hypnosis databases for the vehicle driving experiment and the virtual driving experiment are constructed. They can be used for the analysis of feature parameters and for the calibration and training of identification models.

(2) The Stacking ensemble learning method for integrating eye movement features and bioelectricity features is introduced. The LSTM base learner is trained with the eye movement data preprocessed through principal component analysis. The KNN base learner is trained with the ECG and EMG data preprocessed through the high-order spectral feature method. The data predicted by the two base learners is used to establish a road hypnosis identification model.

(3) A road hypnosis identification model is trained by the SVM algorithm with the data predicted by the LSTM base learner and the KNN base learner. To verify the effectiveness and accuracy of the fusion model, RMSE is selected as the evaluation indicator. The results indicate that the road hypnosis identification model based on the Stacking ensemble learning method proposed in this paper can accurately identify road hypnosis in the virtual driving experiment and the vehicle driving experiment.

The existence of road hypnosis and the feasibility of identifying it through multiple feature parameters have been further demonstrated in this research. The research provides alternative methods and technical support for real-time and accurate identification of road hypnosis. It is of great significance for improving and enriching driving assistance systems, as well as enhancing the intelligent and active safety performance of vehicles.

-

The authors confirm contribution to the paper as follows: study conception and design: Wang X, Wang J; data collection: Wang X, Wang J, Chen L, Wang B, Han J, Feng K, Wang G, Shi H; analysis and interpretation of results: Wang X, Wang J, Chen L, Wang B, Shi H; draft manuscript preparation: Wang X, Wang J, Chen L, Zhang H. All authors reviewed the results and approved the final version of the manuscript.

-

The data that support the findings of this study are available on request from the corresponding author, Wang X.

This research is supported by the New Generation of Information Technology Innovation Project of China University Innovation Fund of Ministry of Education (Grant No. 2022IT191), the Qingdao Top Talent Program of Innovation and Entrepreneurship (Grant No. 19-3-2-8-zhc), the project 'Research and Development of Key Technologies and Systems for Unmanned Navigation of Coastal Ships' of the National Key Research and Development Program (Grant No. 2018YFB1601500), the General Project of Natural Science Foundation of Shandong Province of China (Grant No. ZR2020MF082), and the Shandong Intelligent Green Manufacturing Technology and Equipment Collaborative Innovation Center (Grant No. IGSD-2020-012).

-

The authors declare that they have no conflict of interest. Xiaoyuan Wang is an Editorial Board member of Digital Transportation and Safety who was blinded from reviewing or making decisions on the manuscript. The article was subject to the journal's standard procedures, with peer-review handled independently of this Editorial Board member and the research groups.

- Copyright: © 2024 by the author(s). Published by Maximum Academic Press, Fayetteville, GA. This article is an open access article distributed under Creative Commons Attribution License (CC BY 4.0), visit https://creativecommons.org/licenses/by/4.0/.

-

About this article

Cite this article

Chen L, Wang J, Wang X, Wang B, Zhang H, et al. 2024. A road hypnosis identification method for drivers based on fusion of biological characteristics. Digital Transportation and Safety 3(3): 144−154 doi: 10.48130/dts-0024-0013

A road hypnosis identification method for drivers based on fusion of biological characteristics

- Received: 18 April 2024

- Revised: 01 August 2024

- Accepted: 03 August 2024

- Published online: 30 September 2024

Abstract: Risky driving behaviors, such as driving fatigue and distraction, have recently received more attention. There is also much research about driving styles, driving emotions, older drivers, drugged driving, DUI (driving under the influence), and DWI (driving while intoxicated). Road hypnosis is a special behavior that significantly impacts traffic safety. However, there is little research on this phenomenon. Road hypnosis, as an unconscious state, can frequently occur while driving, particularly in highly predictable, monotonous, and familiar environments. In this paper, vehicle and virtual driving experiments are designed to collect biological characteristics, including eye movement and bioelectric parameters. Typical scenes in tunnels and highways are used as experimental scenes. LSTM (Long Short-Term Memory) and KNN (K-Nearest Neighbor) are employed as the base learners, while SVM (Support Vector Machine) serves as the meta-learner. A road hypnosis identification model is proposed based on ensemble learning, which integrates bioelectric and eye movement characteristics. The experimental results show that the proposed model has good identification performance. In this study, alternative methods and technical support are provided for real-time and accurate identification of road hypnosis.

-

Key words:

- Road hypnosis

- State identification

- Active safety

- Drivers

- Intelligent vehicles