
The growing focus on health has driven consumers to demand higher food quality. A recent cross-national consumer survey in Europe revealed that the physical appearance of food, including color, size, and shape, is the most important factor when consumers evaluate food quality, cited by 52.7% of the 797 survey participants[1]. Specifically, freshness is one of the most frequently cited quality cues based on physical appearance. In addition, chemical properties, such as nutritional components, play a critical role in determining overall food quality, as changes in them often lead to noticeable changes in physical attributes[2,3].

Conventional quality assessment methods are not ideal for food industries because they are time-consuming, laborious, and/or destructive[4,5]. In current practice, multiple methods are typically combined to comprehensively analyze food quality indicators, which prolongs the overall analysis time and increases labor demands. For example, total soluble solids and titratable acidity are commonly measured in fruits using Brix measurement and titration, while total volatile basic nitrogen (TVBN) can be measured as a key seafood quality indicator using titration or gas chromatography[6,7]. Colorimetric analysis measures the color attributes of food. Texture analyzers measure fruit firmness by puncturing, providing an indication of ripeness[8]. It is also common to determine food quality manually using criteria cards, such as those used for evaluating beef marbling grades, which may generate relatively subjective results due to human interpretation. To resolve these technical challenges, optical sensors have recently been utilized for rapid and non-destructive quality assessment. RGB imaging and spectroscopic techniques, such as near-infrared (NIR) spectroscopy, Raman spectroscopy, and hyperspectral imaging (HSI), have been commonly studied[9]. Despite their advantage of rapid data acquisition, these methods face challenges in feature extraction and interpretation due to the vast amounts of complex data they generate[10].

Machine learning extracts critical features from data and offers accurate solutions, enabling users to interpret complex datasets through efficient algorithms[11]. It is commonly applied to tasks such as classification and regression. The food industry has recently started leveraging machine learning to analyze data collected from optical sensors for food quality assessment, as well as in automation systems for pre- and post-harvest processing[12]. By integrating machine learning with optical sensors, food industries can minimize subjective decisions based on human visual assessment and achieve rapid, accurate quality evaluation. Furthermore, computer vision, which combines optical sensors and machine learning, could guide robotics for consistent control of food quality while reducing repetitive tasks for humans[13].

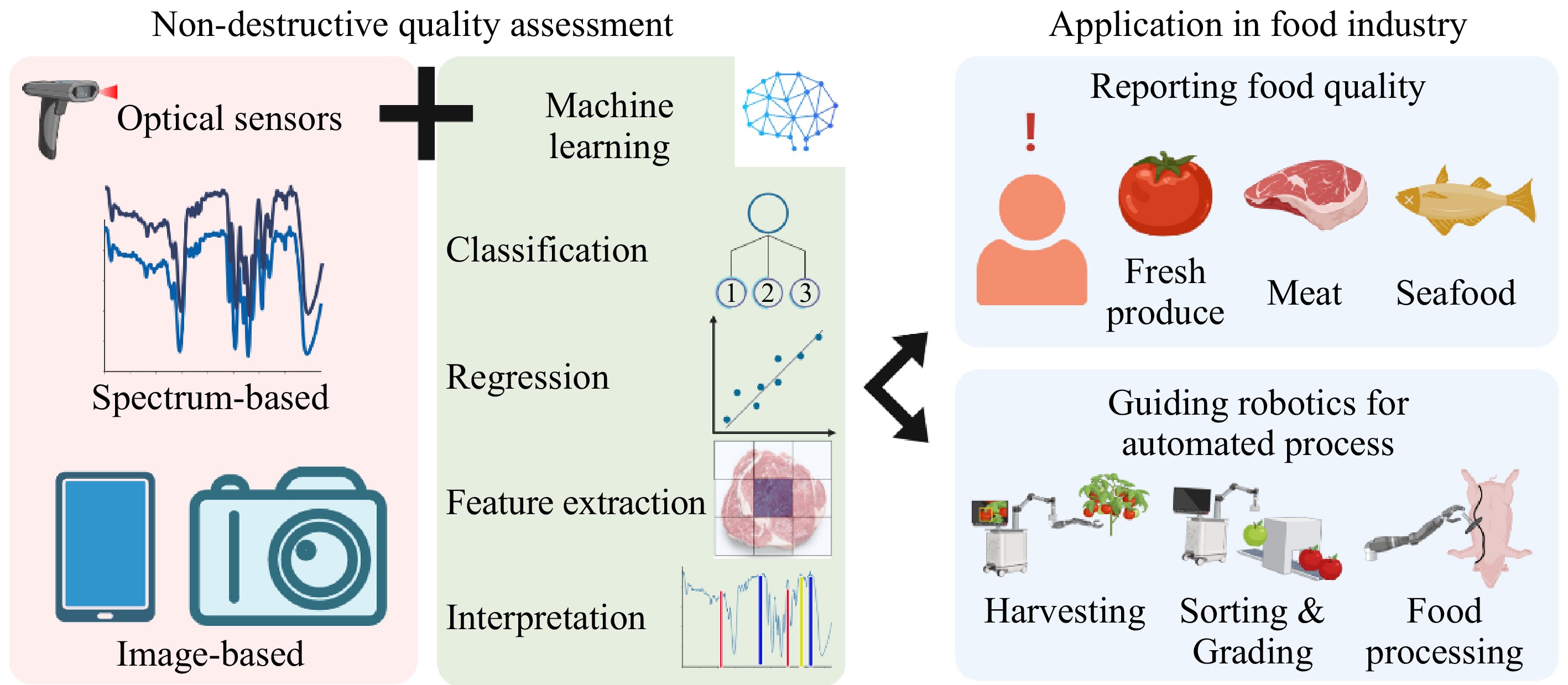

This mini-review provides a focused perspective on the application of machine learning, optical sensors, and robotics in pre- and post-harvest food processing for quality assessment and control. The workflow is summarized in Fig. 1. Representative studies published in the last 5 years are discussed to demonstrate the application of this novel interdisciplinary technology across various food industries, including fruit and vegetables, meat, and seafood. The food types, types of optical sensors, machine learning models, and key results are summarized in Table 1. The advantages of using these techniques include, but are not limited to, i) the implementation of non-destructive methods for real-time analysis and waste reduction; ii) decreased reliance on human labor; and iii) adaptability across food industries and food products.

Figure 1. Overview of non-destructive quality assessment using optical sensors and machine learning to report food quality and guide robotics for the automated process. This figure was created using BioRender.

Table 1. Applications of optical sensors and machine learning in food quality assessment for reporting food quality and guiding robotics-driven food processing.

| Food | Quality indicators | Type of optical sensors (a) | Machine learning models: feature extraction | Machine learning models: classification/regression | Results (R2 or accuracy) | Ref. |
| --- | --- | --- | --- | --- | --- | --- |
| Application #1: Food quality assessment | | | | | | |
| Spinach and Chinese cabbage | Storage time based on color and texture | HSI camera | Gradient-weighted class activation mapping (Grad-CAM) | [Classification] Logistic regression (LR), support vector machine (SVM), random forest (RF), one-dimensional convolutional neural network (1D-CNN), AlexNet, long short-term memory (LSTM) | Accuracies for spinach: LR: 75%, SVM: 80.36%, RF: 51.79%, AlexNet: 82.14%, ResNet: 78.57%, LSTM: 82.14%, CNN: 82.14%, CNN-LSTM: 85.17%. Accuracies for Chinese cabbage: LR: 83.93%, SVM: 82.14%, RF: 64.29%, AlexNet: 78.57%, ResNet: 71.43%, LSTM: 78.57%, CNN: 82.14%, CNN-LSTM: 83.93% | [18] |
| Apples | Total soluble solids contents | NIR spectrometer | Automatic encoder neural network | [Regression] Partial least squares (PLS) | R2: 0.820 – 0.953 | [19] |
| Beef | Thawing loss, water content, color | Raman spectrometer | Ant colony optimization (ACO), uninformative variable elimination (UVE), competitive adaptive reweighted sampling (CARS) | [Regression] PLS | R2 for PLS: 0.374 – 0.775; ACO-PLS: 0.479 – 0.793; UVE-PLS: 0.928 – 0.994; CARS-PLS: 0.609 – 0.971 | [27] |
| Beef | Muscle segmentation, marbling | Structured-illumination reflectance image camera | Unet++, DeepLabv3+, SegFormer, ResNeXt101 | [Classification] Regularized linear discriminant analysis | Accuracy for whole image: 77.45% – 84.47%; segmented image: 83.19% – 88.72%; selected features in segmented image: 90.85% | [29] |
| Seabass fillet | Storage days determined based on TVB-N and protein | Raman spectrometer | ANOVA | [Classification] SVM, CNN | Accuracy for whole spectrum: SVM: 81.4%, CNN: 86.2%; accuracy for selected spectral features: SVM: 83.8%, CNN: 90.6% | [36] |
| Fish | Storage days determined based on fisheye | Smartphone camera | VGG19, SqueezeNet | [Classification] LR, SVM, RF, k-nearest neighbor (kNN), artificial neural network (ANN) | Accuracy for VGG19 extraction with LR: 65.9%, SVM: 74.3%, RF: 68.3%, kNN: 67.8%, ANN: 77.3%; accuracy for SqueezeNet extraction with LR: 64.0%, SVM: 57.6%, RF: 64.5%, kNN: 63.0%, ANN: 72.9% | [38] |
| Application #2: Pre- and post-harvest processing with robotics | | | | | | |
| Strawberry | Maturity | RGB camera | YOLOv4 | [Classification] YOLOv4 | Success rate for harvesting in lab: 60.00 – 100, in fields: 37.50 – 94.00 | [42] |
| Apple | Maturity | RGB camera, laser camera | YOLOv3 | [Classification] YOLOv3 | Success rate for harvesting in fields: 65.2% – 85.4% | [44] |
| Winter jujube | Maturity | RGB camera | YOLOv3 | [Classification] YOLOv3 | Success rate for grading: 97.28% | [45] |
| Pork | Cutting point | RGB camera | U-Net | [Classification] U-Net | Performance for object detection: mean average precision: 0.963 – 0.984, mean average recall: 0.974 – 0.994 | [47] |
| Pork | Cutting and grasping point | CT camera | U-Net, mask-region-based convolutional neural network (Mask-RCNN), ICNet | [Classification] U-Net, Mask-RCNN, ICNet | Accuracy for object detection: U-Net: 0.904, Mask-RCNN: 0.920, ICNet: 0.979 | [48] |
| Pork loin | Cutting, slicing, trimming | RGB camera, proximity sensor | - | [Classification] RF | Accuracy for contact detection: 90.43% – 98.14%, approach detection: > 99.9% | [50] |

(a) Full names of optical sensors: hyperspectral imaging (HSI), near-infrared (NIR), computed tomography (CT).

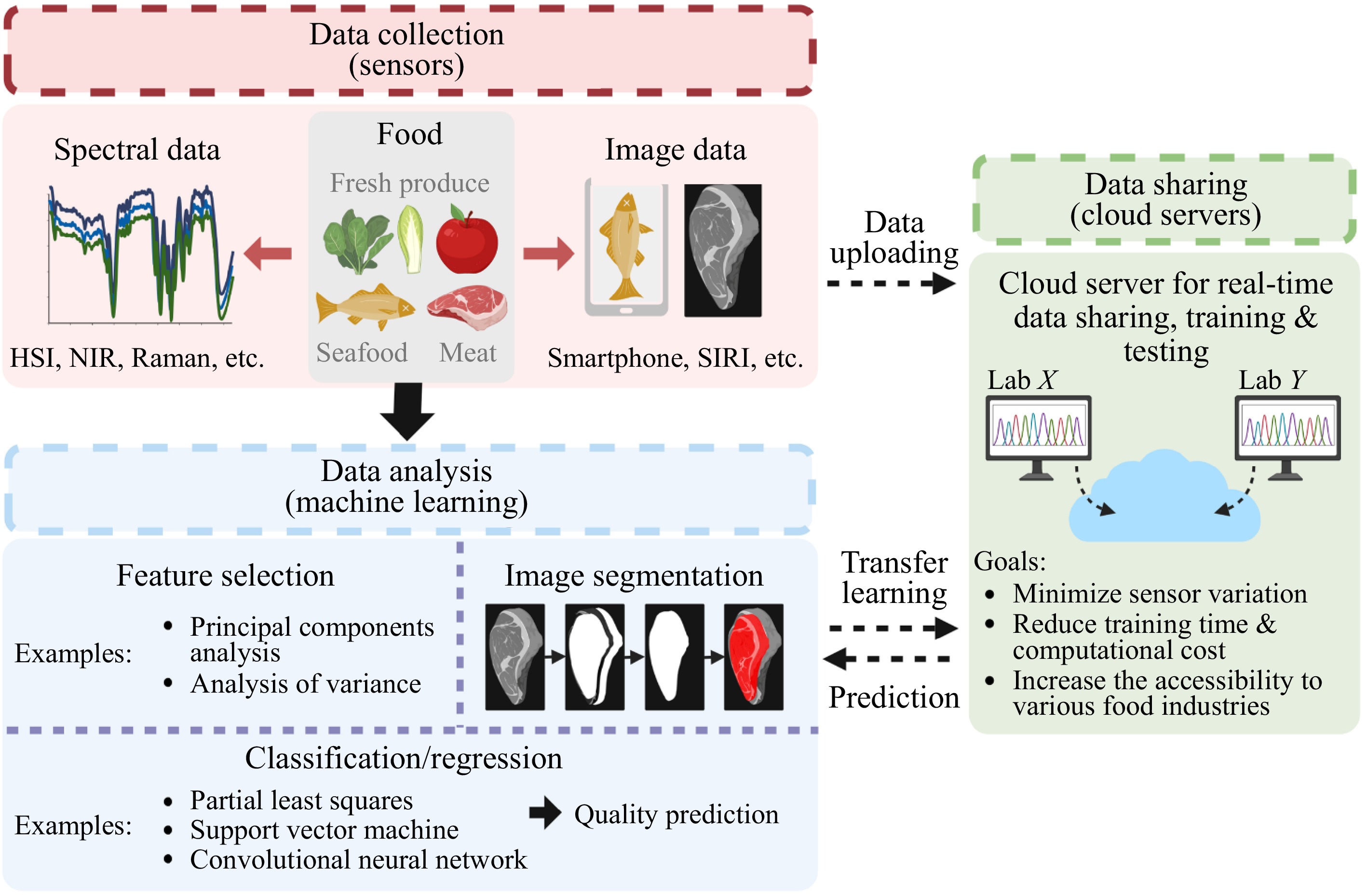

For consumers, the physical and chemical properties of food, such as freshness and nutritional attributes, are considered quality indicators. However, most conventional methods for determining these quality indicators are destructive[14]. Mechanical damage to food can lead to quality deterioration and unnecessary waste, which is particularly critical for high-value products and for food industries operating with low profit margins[15]. To avoid this problem, optical sensors combined with machine learning have been applied as non-destructive methods for food quality assessment. Depending on their underlying principles, optical sensors collect signals from various sources, such as visible light, fluorescence, reflectance, and Raman scattering. They enable the rapid and cost-effective detection of targets without damaging food samples[16]. Machine learning models can analyze data from optical sensors to predict specific quality features, such as color, water content, and soluble solids. These applications have been demonstrated for fresh produce, meat, and seafood. Figure 2 illustrates the key components of the quality assessment workflow, including data collection using optical sensors, data analysis using machine learning, and data sharing to cloud servers for model training and sample testing.

Figure 2. Non-destructive food quality assessment using machine learning and optical sensors. The most common data collection methods are hyperspectral imaging (HSI), near-infrared (NIR) spectroscopy, Raman spectroscopy, smartphones, and structured-illumination reflectance imaging (SIRI). For the data analysis, feature selection is applied to select critical spectral features, while image segmentation is used to extract critical image features. A variety of machine learning models (either classification or regression models) are applied for food quality prediction. The data from different testing sites can be shared with cloud servers for model training and unknown sample testing. This figure was created using BioRender.

Fresh produce


Due to the short pack-out time and limited shelf life of fresh produce, quality assessment should be both fast and accurate to prevent the release of spoiled or contaminated products to the market[17]. To achieve these goals, HSI has been used to provide chemical and morphological features from spectral and spatial information using illumination ranging from the visible to the infrared region. For instance, the freshness of spinach and Chinese cabbage was classified based on storage time (0, 3, 6, and 9 h) at room temperature using HSI and deep learning classification models[18]. Although the HSI spectra for each storage time overlapped and were indistinguishable by the naked eye, the machine learning model demonstrated the feasibility of predicting the storage periods of the tested vegetables. Convolutional neural network (CNN) and long short-term memory (LSTM) neural network models were applied to efficiently analyze the sequential features of HSI spectra, achieving accuracies of 85% and 84% for spinach and Chinese cabbage, respectively. Gradient-weighted Class Activation Mapping (Grad-CAM) was further used to identify the important spectral wavenumbers. The insights obtained from Grad-CAM could help users understand which components changed at specific wavenumbers, thereby validating the model's decisions and excluding false results caused by confounding factors. Another study introduced an Internet of Things (IoT) cloud system to manage data from multiple devices and employed transfer learning for model training[19]. The IoT refers to a network of interconnected physical devices that collect, exchange, and analyze data over the internet. This connectivity enables the devices to perform automated actions, enhance operational efficiency, and reduce the need for constant human intervention. To evaluate the ripeness of apples, soluble solid contents were predicted using handheld NIR detectors and deep learning[19]. The NIR spectra collected from various devices were transferred to the IoT cloud system, where they were calibrated to minimize device variation. These calibrated data were then used to train the automatic encoder neural network models, achieving an R2 value greater than 0.95. The IoT cloud system could efficiently integrate and analyze big data, regardless of where the data were collected. In addition, transfer learning on the IoT cloud system could accelerate model training by freezing the pre-trained front section of the model. This platform saves users' time and computational cost by eliminating the need to train the model from scratch. Furthermore, optical sensors can assess food quality when products are packaged in transparent materials. For instance, visible to short-wave NIR spectroscopy combined with a neural network-based machine learning model was employed to predict key quality characteristics of fresh dates in modified atmosphere packaging[20]. The model achieved regression R² values of 0.854 for pH, 0.893 for total soluble solids, and 0.881 for sugar content[20]. Overall, these non-destructive approaches showed that deep learning models successfully identified the quality of fruits and vegetables, even in the presence of minor quality changes over short periods. Transfer learning also reduces the effort required for model training and allows machine learning models to be applied to a variety of foods.

Meat


When purchasing meat, consumers consider comprehensive quality cues, such as freshness and appearance[21,22]. Meat quality assessment primarily relies on visual inspection or sensory evaluation to assess color, marbling, and overall appearance. These methods require trained personnel, are expensive and time-consuming, and are prone to variability between inspectors[23]. Objective methods such as colorimetry, pH measurement, water-holding capacity tests, and texture analysis are often used in combination to achieve a more comprehensive understanding of meat quality[24,25]. However, these techniques remain time-consuming and cost-intensive, limiting their widespread use. In recent studies, mechanical methods for analyzing meat texture and assessing water and fat contents have been replaced by optical sensor-based methods to maintain sample integrity[26]. For example, a 785-nm Raman spectrometer was applied to determine the effects of freezing and thawing on beef quality[27]. Color changes and water loss were selected as key quality features for predicting beef quality using machine learning models. Raman spectra often contain noise, so meaningful information must be extracted from them. Therefore, the number of principal components and the important variables were selected based on the lowest root mean square error values to improve the performance of the partial least squares model. By integrating variable selection with the uninformative variable elimination partial least squares (UVE-PLS) model, Raman spectroscopy could predict the color values (i.e., L*, a*, and b*) and water content with an R2 of 0.99. This method improves on traditional methods by eliminating sample destruction.
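The two regression metrics used in this passage, root mean square error (RMSE) for choosing between model settings and R2 for reporting fit, can be illustrated with a minimal sketch. The function names and the numbers below are hypothetical and are not taken from the cited study; they only show how the candidate with the lowest RMSE would be picked and how R2 is computed.

```python
import math


def rmse(y_true, y_pred):
    """Root mean square error between reference and predicted values."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared) / len(squared))


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    if ss_tot == 0:
        raise ValueError("reference values have zero variance")
    return 1.0 - ss_res / ss_tot


def select_lowest_rmse(y_true, candidates):
    """Return the name of the candidate prediction set with the lowest RMSE."""
    return min(candidates, key=lambda name: rmse(y_true, candidates[name]))


# Hypothetical reference values (e.g., measured L* color values) and the
# predictions of two hypothetical model settings.
reference = [40.0, 42.0, 44.0, 46.0]
candidates = {
    "setting_a": [40.5, 41.5, 44.5, 45.5],
    "setting_b": [42.0, 42.0, 42.0, 46.0],
}
best = select_lowest_rmse(reference, candidates)
print(best, rmse(reference, candidates[best]), r_squared(reference, candidates[best]))
```

In practice the RMSE would be computed on cross-validation or held-out samples rather than on the training data, so that the selected setting is not simply the one that overfits most.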

In addition to spectra-based techniques, imaging techniques are used alongside machine learning to provide information on meat appearance[28]. For example, one study analyzed the muscle and marbling contents as indicators of the taste and flavor of beef using structured illumination reflectance imaging (SIRI) and deep learning models[29]. Conventionally, marbling assessment is conducted manually by well-trained personnel. Alternatively, collecting and analyzing images with optical sensors and machine learning models could shorten processing time and reduce human errors[29]. SIRI illuminates the sample with multiple sinusoidal patterns and captures the reflected signals to improve image resolution and contrast[30]. The regions of beef muscles were differentiated from non-muscle regions using different segmentation models, including Unet++[31], DeepLabv3+[32], and SegFormer[33]. The transformer-based SegFormer model achieved the highest R2 (0.996) compared to the two CNN-based models (Unet++ with an R2 of 0.950 and DeepLabv3+ with an R2 of 0.984). After segmentation, the ResNeXt-101 model was able to classify three degrees of beef marbling with a classification accuracy of 88.7%. Classification using segmented muscle images was more accurate (88.7%) than classification using whole images (84.5%). This accuracy was further improved to 90.9% by applying Minimum Redundancy Maximum Relevance to identify an optimal set of 140 features based on their weights (i.e., the order of importance to the classification).

Seafood


Seafood is perishable, making timely quality assessment crucial. TVBN and K-value are commonly used indicators for evaluating seafood freshness, reflecting protein and nucleotide degradation. They are typically measured using traditional chemical analysis methods, such as the steam distillation method, gas chromatography, or high-performance liquid chromatography (HPLC)[34,35]. These methods involve sample preparation and time-consuming procedures. To replace these destructive methods, a recent study utilized Raman spectroscopy to track the change in chemical bonds related to protein or fat content in sea bass during storage[36]. The freshness of sea bass was assessed using a CNN model that correlated TVBN values with the Raman spectra. Even though deep learning can deal with complex data, the high dimensionality and noise of Raman spectra generally reduce prediction accuracy. Thus, this study selected the weighted features using analysis of variance (ANOVA), improving classification accuracy from 86.2% to 90.6%[21]. To further understand the key Raman peaks contributing to the classification, Grad-CAM[37] was applied to visualize the weight of each Raman wavenumber. In another study, a smartphone application was developed to predict fish freshness using images of fisheye captured with a smartphone[38]. An opaque appearance of the fish's pupils indicates that the fish is no longer fresh. After obtaining the image data set, feature extraction was performed using two CNN-based models, namely SqueezeNet[39] and Visual Geometry Group 19 (VGG19)[40]. Five machine learning models were trained on these features, and the freshness of fish was classified based on the storage date. The classification models included k-nearest neighbor, support vector machine, artificial neural network, logistic regression, and random forest. Artificial neural networks outperformed the other models, obtaining classification accuracies of 77.3% and 72.9% with features extracted by VGG19 and SqueezeNet, respectively. This method provides consumers with an option to assess the quality of their food directly using their smartphones.
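As an illustration of ANOVA-based feature selection on spectra, the following minimal sketch scores each spectral variable with a one-way ANOVA F statistic across classes and keeps the highest-scoring ones. It is an assumption about how such a ranking could be done, not the procedure of the cited study; the function names, the toy spectra, and the class labels are hypothetical.

```python
def anova_f(groups):
    """One-way ANOVA F statistic for one feature; `groups` holds one list of values per class."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    if k < 2 or n_total <= k or any(len(g) == 0 for g in groups):
        raise ValueError("need at least two non-empty classes and more samples than classes")
    means = [sum(g) / len(g) for g in groups]
    grand_mean = sum(sum(g) for g in groups) / n_total
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    if ss_within == 0:
        return float("inf") if ss_between > 0 else 0.0
    return (ss_between / (k - 1)) / (ss_within / (n_total - k))


def rank_features(spectra, labels, top_n):
    """Return (indices of the top_n features by F score, list of all F scores)."""
    classes = sorted(set(labels))
    scores = []
    for j in range(len(spectra[0])):
        groups = [[s[j] for s, y in zip(spectra, labels) if y == c] for c in classes]
        scores.append(anova_f(groups))
    order = sorted(range(len(scores)), key=lambda j: scores[j], reverse=True)
    return order[:top_n], scores


# Hypothetical two-variable "spectra": variable 0 shifts with storage, variable 1 does not.
spectra = [[1.0, 5.0], [2.0, 5.1], [3.0, 4.9], [4.0, 5.0], [5.0, 5.1], [6.0, 4.9]]
labels = ["fresh", "fresh", "fresh", "stored", "stored", "stored"]
selected, scores = rank_features(spectra, labels, top_n=1)
print(selected, scores)
```

Only the selected variables would then be passed to the classifier, which reduces the dimensionality and the influence of noisy wavenumbers.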

-

Robotics is an interdisciplinary field focusing on designing, programming, and operating robots. Robots have been applied to the food industry since the 1970s to automate repetitive or physically demanding tasks, thereby reducing human fatigue and increasing production efficiency[41]. Computer vision, a subfield of artificial intelligence that combines optical sensors and machine learning, enables machines to interpret and understand visual information in a way similar to human vision. With the aid of computer vision technology, robots have recently become capable of performing more sophisticated operations. The application of robotics further enhances the automation of both pre- and post-harvest food processing, contributing to high food quality and yield.

Harvesting


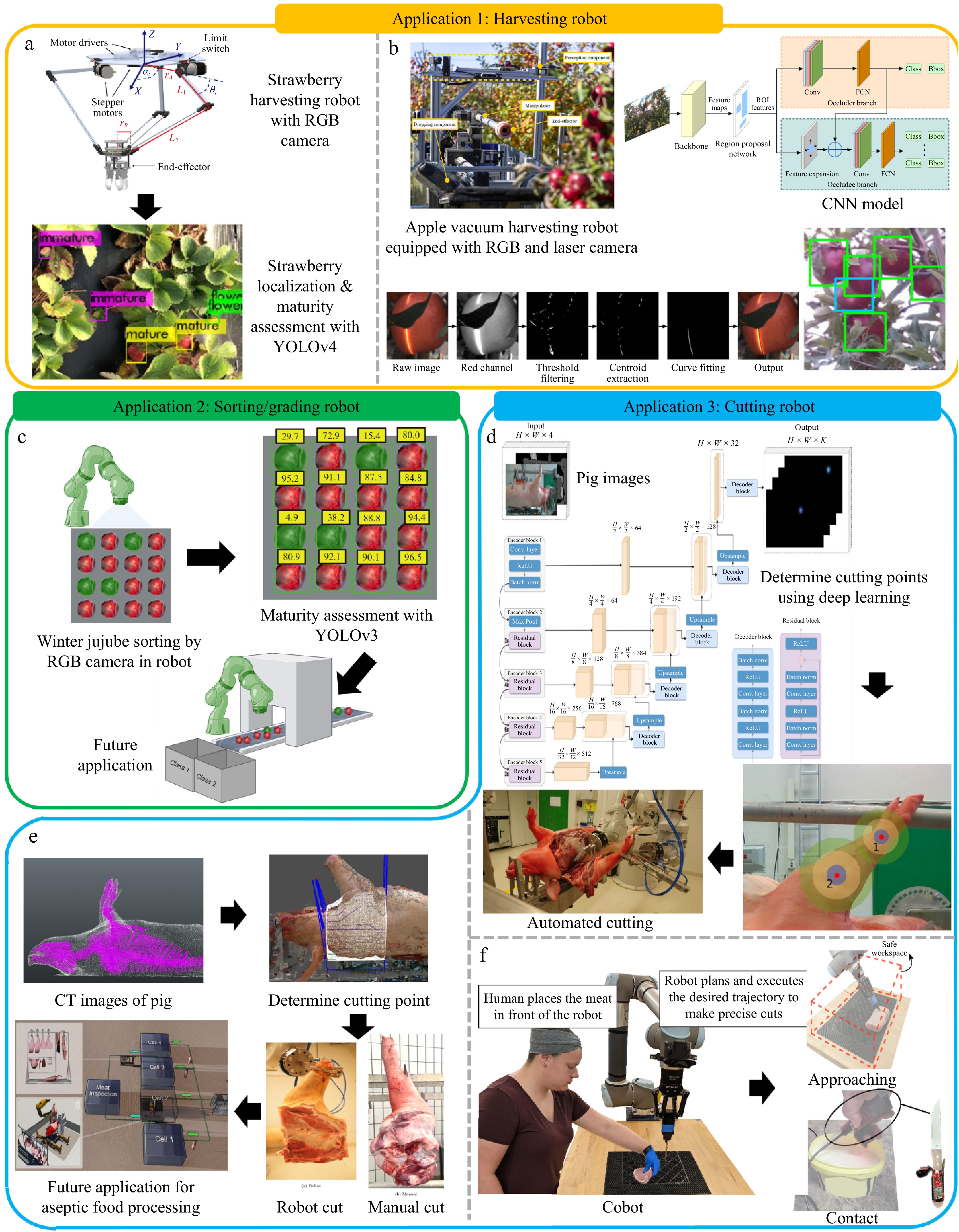

Automated harvesting robots have been developed to precisely harvest fruits after assessing fruit quality levels. Computer vision guides the operation of harvesting robots, which require accurate object detection to assess fruit quality and to distinguish fruits from environmental backgrounds. Guided by these detections, robotic arms locate fruits, control the force used to grab them, and prevent them from being damaged. Harvesting robots have been developed alongside computer vision for both soft fruits (e.g., strawberries) and tree fruits (e.g., apples). For example, a five-finger robotic arm was developed to grasp strawberries and rotate them to detach them from the plants (Fig. 3a)[42]. The You Only Look Once v4 (YOLOv4) model was trained to detect mature strawberries using the images captured by a camera on the robotic arm. The YOLO algorithm can detect multiple target objects in the same image from a single analysis, enabling a real-time decision[43]. Five scenarios were tested, ranging from the easiest (fruits isolated from others and unobstructed by obstacles such as leaves) to the most difficult (fruits grouped with others and obstructed by obstacles). The harvesting robot achieved a successful fruit harvesting rate of 71.7% with a cycle time of 7.5 s. Another study designed a robotic apple harvester using both RGB-D cameras and laser line scan cameras (Fig. 3b)[44]. The RGB-D cameras provided color information (RGB) and depth information (D) to create a 3D map for locating the apples, while laser line scan cameras provided line-by-line detailed imaging of apple surfaces. A deep learning model with a Mask R-CNN backbone was developed to segment apples from the complex background and provide the 3D locations of detected apples to guide the robot. A vacuum effector then picked the apples to avoid mechanical damage. This robotic harvester was tested in apple orchards, achieving an average harvesting rate of 73.8% and taking 5.97 s per apple. In these studies, the most common cause of harvesting failure was obstacles that hindered the harvesting robot from reaching the fruits.
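To put the reported success rates and cycle times on a common footing, a rough throughput figure can be derived. The sketch below assumes, purely for illustration, that each cycle is one harvesting attempt and that the robot runs continuously for an hour; neither assumption comes from the cited studies, and the function name is hypothetical.

```python
def successful_picks_per_hour(success_rate, cycle_time_s):
    """Expected successful picks per hour, assuming one attempt per cycle and no idle time."""
    if not 0.0 <= success_rate <= 1.0:
        raise ValueError("success_rate must be a fraction between 0 and 1")
    if cycle_time_s <= 0:
        raise ValueError("cycle_time_s must be positive")
    attempts_per_hour = 3600.0 / cycle_time_s
    return attempts_per_hour * success_rate


# Figures quoted in the text: strawberry robot (71.7%, 7.5 s) and apple robot (73.8%, 5.97 s).
print(round(successful_picks_per_hour(0.717, 7.5), 1))   # about 344 strawberries per hour
print(round(successful_picks_per_hour(0.738, 5.97), 1))  # about 445 apples per hour
```

These numbers are upper-bound estimates under the stated assumptions; travel between plants, bin changes, and retries after failed attempts would lower the real throughput.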

Figure 3. Representative studies in applying computer vision, a subfield of artificial intelligence (AI) that combines machine learning and optical sensors, to determine food quality and then guide robotics for automated harvesting, sorting, grading, and processing. (a) Five-finger robot for harvesting strawberries using the YOLOv4 model. Reproduced from Tituaña et al.[42]. (b) Apple harvesting robots use a vacuum system and RGB and laser cameras that are controlled by mask-region-based convolutional neural network (Mask-RCNN) model. Reproduced from Zhang et al.[44]. (c) Sorting and grading robot for winter jujube using an RGB camera and the YOLOv3 model[45]. The image was created using BioRender. (d) Prediction of gripping point for automated meat cutting using RGB imaging, U-Net model, and robotics. Reproduced from Manko et al.[47]. (e) Determination of cutting trajectories using computed tomography (CT) imaging and U-Net model for aseptic automation system. Reproduced from de Medeiros Esper et al.[48]. (f) Working safety evaluation of meat cutting cobot using proximity sensors and random forest model to prevent undesired contact with human workers. Reproduced from Wright et al.[50]. (a), (b), (d), (e) and (f) are licensed under Creative Commons Attribution 4.0 International License.

Food sorting and grading


Manual fruit sorting and grading are subjective and time-consuming. An automated robot equipped with machine learning and optical sensors can enhance accuracy and reduce the burden on humans. For example, a robot was designed to sort winter jujube based on their maturity (Fig. 3c)[45]. Using cameras mounted on the robotic arms, the robot observed winter jujubes on a black flat plate from three different angles to gather comprehensive information about the fruits, simulating a realistic processing scenario where the fruits move on a conveyor belt. The maturity of winter jujube is associated with the ratio of the red area to the total surface area. Therefore, the YOLOv3 algorithm was trained to classify winter jujubes at three different maturity levels based on the red area ratio, guiding the robotic arms to place the winter jujubes in different packing boxes. The accuracy of the robotic grader was 97.3%, which was validated using manual grading as the ground truth. The processing time was 1.37 s per fruit.
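The idea that maturity follows the ratio of red area to total surface area can be shown with a simple rule-based sketch. This is a simplified stand-in, not the YOLOv3 classifier used in the cited study: the redness rule, the `margin` value, the two ratio thresholds, and the grade names are all hypothetical choices made for illustration.

```python
def is_red(pixel, margin=40):
    """Treat an (R, G, B) pixel as red if R exceeds both G and B by at least `margin` (assumed rule)."""
    r, g, b = pixel
    return r - max(g, b) >= margin


def red_area_ratio(fruit_pixels, margin=40):
    """Fraction of fruit pixels classified as red; background pixels must already be excluded."""
    if not fruit_pixels:
        raise ValueError("fruit_pixels must not be empty")
    red_count = sum(1 for p in fruit_pixels if is_red(p, margin))
    return red_count / len(fruit_pixels)


def grade_maturity(ratio, thresholds=(0.3, 0.7)):
    """Map a red-area ratio to one of three maturity levels using assumed thresholds."""
    low, high = thresholds
    if ratio < low:
        return "immature"
    if ratio < high:
        return "semi-mature"
    return "mature"


# Hypothetical fruit mask: six reddish pixels and four greenish pixels.
pixels = [(200, 50, 40)] * 6 + [(120, 180, 60)] * 4
ratio = red_area_ratio(pixels)
print(ratio, grade_maturity(ratio))
```

A learned detector replaces both the hand-written redness rule and the fixed thresholds, which is what makes it robust to lighting changes and viewing angle on a moving conveyor.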

Food processing


Automated robotics with computer vision have also been applied to meat production, addressing food safety problems and improving meat quality[46]. In carcass processing, the appropriate cutting points vary across different types of meat and therefore require trained experts. Alternatively, computer vision can assist robots in meat cutting, ensuring uniformity in meat products. Additionally, production yields are expected to increase due to the rapid processing speed. For example, the U-Net deep learning model was trained on RGB-D images to predict the gripping and cutting points of pig limbs (Fig. 3d)[47]. The mean average precision and recall of Norwegian-style gripping points were 0.963 and 0.974, respectively, while for Danish-style gripping points, they were 0.984 and 0.994. Another study employed 3D computed tomography (CT) imaging to scan the carcass, resulting in a more accurate trajectory for the cutting robot (Fig. 3e)[48]. U-Net, mask-region-based convolutional neural network (Mask-RCNN), and ICNet models were developed to predict the arrangement of rib, neck bone, and cartilage for splitting shoulder cuts. The accuracies of the three models were 0.904, 0.920, and 0.979, respectively. These studies showed accurate and robust results when cutting targeted sections of pork.
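Precision and recall for predicted gripping or cutting points can be illustrated with a fixed-tolerance sketch. This is deliberately simpler than the mean average precision and recall reported above, which average over confidence thresholds; the matching rule, the pixel tolerance, and the coordinates are hypothetical and do not reproduce the cited evaluation.

```python
import math


def match_points(predicted, ground_truth, tolerance):
    """Greedily match each predicted point to the nearest unused true point within `tolerance`.

    Returns (true_positives, false_positives, false_negatives).
    """
    unmatched = list(ground_truth)
    true_positives = 0
    for point in predicted:
        best, best_distance = None, tolerance
        for candidate in unmatched:
            distance = math.dist(point, candidate)
            if distance <= best_distance:
                best, best_distance = candidate, distance
        if best is not None:
            unmatched.remove(best)
            true_positives += 1
    false_positives = len(predicted) - true_positives
    false_negatives = len(unmatched)
    return true_positives, false_positives, false_negatives


def precision_recall(tp, fp, fn):
    """Precision = TP / (TP + FP); recall = TP / (TP + FN); 0.0 when undefined."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical pixel coordinates of predicted and annotated gripping points.
predicted = [(10, 10), (50, 52), (90, 90)]
annotated = [(11, 10), (50, 50), (70, 70), (30, 30)]
tp, fp, fn = match_points(predicted, annotated, tolerance=5.0)
print(tp, fp, fn, precision_recall(tp, fp, fn))
```

High precision means the robot rarely acts on a spurious point, while high recall means few valid gripping points are missed; both matter when a wrong cut damages the product.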

Robots not only process food independently but also collaborate with human operators on the same task. Such a multi-purpose collaborative robot is known as a 'cobot'. The concept of human-robot interaction emerged to understand the activities of humans and robots in shared workplaces, ensuring human safety around cutting robots[49]. In one study, the meat-cutting performance and operational safety of a cobot were evaluated (Fig. 3f)[50]. The cobot required human assistance to place the pork loin in cutting positions; therefore, it had to guarantee the safety of employees. A proximity sensor and an inertial measurement unit were mounted on the cutting knife to detect the desired contact with the meat and the undesired contact with, or approach of, human operators. The random forest classifier achieved accuracy ranging from 90.43% to 98.14% for classifying butter and meat contact and greater than 99.99% for classifying approaches to safe workplace areas.

-

For food quality assessment, machine learning and optical sensors can achieve rapid and non-destructive testing. Compared to manual quality assessment, optical sensors with machine learning rapidly process comprehensive spectral and image data to provide a real-time decision. The development of transfer learning also facilitates the fine-tuning of machine learning models for adapting to various foods. On the other hand, food industries save time and labor by integrating computer vision technology and robotics for harvesting, sorting, and grading, ensuring high-quality food production. Using independent robots not only reduces the need for repetitive human tasks but also enables an aseptic process to minimize microbial contamination.

To further apply machine learning, optical sensors, and robotics for a smarter food manufacturing system, several challenges need to be resolved. Firstly, there is a need for standardized, publicly available databases. Training machine learning models requires sufficient data. However, food-specific databases are scarce. Only a few food-specific image databases are available, such as Food-101 (containing 101 food categories with 1,000 images each), UEC Food 256 (containing 256 food categories with annotated images and bounding boxes for object detection), and VireoFood-172 (containing 172 food categories) (accessed as of October 2024). As far as we know, only a few HSI data sets are publicly available for food, such as the DeepHS Fruit v2 Dataset, which contains 1,018 labeled and 4,671 unlabeled data. Secondly, the black-box nature of deep learning models makes it difficult for users to completely understand and interpret the results. In the future, developing more explainable machine learning models should be encouraged. Interpretation models can improve the reliability of classification and regression models by explaining the weighted features in the analysis. Thirdly, the performance of independent robots needs to be further enhanced to improve the quality of food products. In fruit harvesting, obstacles such as leaves that hinder the targeting of fruits could decrease detection accuracy and thus lower harvest rates. Flexible robotic arms can reduce harvesting failure by avoiding obstacles and reaching fruits. In meat processing, undesirable bone fractions or meat loss can be minimized using flexible and adaptive robotic arms to optimize cutting trajectories. Fourthly, ensuring user safety is necessary when applying robots in food industries, especially for robots that work collaboratively with human workers. Contact detection sensors can be developed to avoid unexpected contact between the cobot and the operators. Last but not least, previous studies have primarily focused on applications in the fresh produce, meat, and seafood industries. Future research should broaden its focus to include other agri-food sectors, such as grains, baking, and dairy products, to enhance the scope and impact of these technologies.

-

This mini-review discusses the application of non-destructive optical sensors and machine learning for food quality assessment, as well as the application of computer vision and robotics for automating the processing of high-quality food. Machine learning models can extract and learn features from high-dimensional data and accurately predict various food quality attributes. To develop an optimal machine learning model, algorithms should be selected and compared based on the research questions, as well as the size and nature of the dataset. The model performance also depends on factors such as the optimization of hyperparameters[51]. These advantages allow real-time quality evaluation and have the potential to automate food harvesting, sorting, and grading. Data transfer systems and standardized data collection criteria are needed in future studies to ensure a high quantity and quality of data. Advanced object detection algorithms and robotic mechanisms can be further improved to provide an automated pre- and post-harvest process.

This work was financially supported by the U.S. Department of Agriculture's National Institute of Food and Agriculture (USDA-NIFA) Capacity Building Grants for Non-Land-Grant Colleges of Agriculture Program (Grant No. 2024-70001-43485) and Oregon State University Startup Grant.

-

The authors confirm their contribution to the paper as follows: writing – original draft: Lee IH; writing – review & editing, visualization: Lee IH, Ma L; conceptualization, supervision, project administration, funding acquisition: Ma L. Both authors reviewed and approved the final version of the manuscript.

-

Data sharing is not applicable to this mini-review article as no datasets have been generated or analyzed.

-

The authors declare that they have no conflict of interest.

- Copyright: © 2025 by the author(s). Published by Maximum Academic Press on behalf of China Agricultural University, Zhejiang University and Shenyang Agricultural University. This article is an open access article distributed under Creative Commons Attribution License (CC BY 4.0), visit https://creativecommons.org/licenses/by/4.0/.

-

About this article

Cite this article

Lee IH, Ma L. 2025. Integrating machine learning, optical sensors, and robotics for advanced food quality assessment and food processing. Food Innovation and Advances 4(1): 65−72 doi: 10.48130/fia-0025-0007

Integrating machine learning, optical sensors, and robotics for advanced food quality assessment and food processing

- Received: 22 October 2024

- Revised: 28 November 2024

- Accepted: 07 January 2025

- Published online: 20 February 2025

Abstract: Machine learning, in combination with optical sensing, extracts key features from high-dimensional data for non-destructive food quality assessment. This approach overcomes the limitations of traditional destructive and labor-intensive methods, facilitating real-time decision-making for food quality profiling and robotic handling. This mini-review highlights various optical techniques integrated with machine learning for assessing food quality, including chemical profiling methods such as near-infrared, Raman, and hyperspectral imaging spectroscopy, as well as visual analysis such as RGB imaging. In addition, the review presents the application of robotics and computer vision techniques to assess food quality and then drive the automation of food harvesting, grading, and processing. Lastly, the review discusses current challenges and opportunities for future research.

-

Key words: Food quality / Artificial intelligence / Optical sensors / Deep learning / Robotics / Automation